For this special history guide, we are going to take a trip back in time to see where the seed of Linux was planted, namely in the Unix systems of the early 1970s, and how it has grown through the modern day. Though most people are unaware of the enormous impact that Unix-like operating systems have had on our society, understanding their storied history helps explain why the Unix model has lived on far longer, and become more successful, than any other operating system architecture (and philosophy) in existence.

In fact, the estimated 5 billion people in the world (more than half the population) who own a mobile phone have been using Unix-based operating systems, knowingly or not, since the smartphone hit consumer shelves in the late 2000s. From the Linux-based Android platform to the BSD-flavored iOS, Unix has taken over the massive mobile market along with the majority of other systems in existence. If you look at the operating system on just about any device besides the desktop PC, it more likely than not runs some form or derivative of Unix.

So, how did an operating system written to port a game from one machine to another gain so much prominence in our world today, more than fifty years after it was first conceived and implemented? Well, our journey begins at AT&T’s famous Bell Laboratories with two unlikely heroes who helped kick off the modern technological age. Strap in and grab some popcorn: this is going to be a wild ride!

The Early Years

The year is 1969. The counterculture revolution in America (and worldwide) reaches its peak with the Woodstock Music and Art Fair in Bethel, NY, where famous artists like Jimi Hendrix, Jefferson Airplane, The Who, Crosby, Stills, Nash & Young, and the Grateful Dead take part in a peaceful gathering of nearly half a million people. Anti-war protests over the United States’ military involvement in Vietnam rattle the country, leaving it even more divided. The Cold War is nearing a boiling point. Yet even with all of this going on, the U.S. also accomplishes the unthinkable: landing a man on the moon for the first time in human history. There is no doubt that the 1970s will start on a groovy note, but you likely won’t read about many of the most important technological innovations of the time in your average history class.

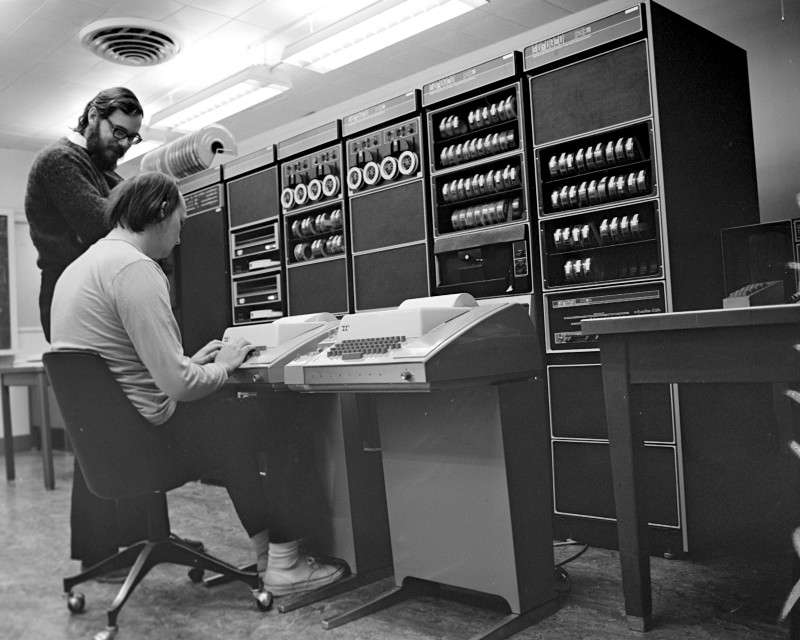

In 1969, Ken Thompson, an engineer and systems expert at AT&T’s Bell Laboratories, found himself without work (and quite bored) as the project that he and his team had been working on, the Multics (Multiplexed Information and Computing Service) time-sharing operating system, had crumbled before his eyes. While planning his next pursuit, Thompson found himself creating and playing computer games on the company’s expensive GE 635 mainframe, which he had access to from his work on Multics. He was especially fond of one game, Space Travel, and who could blame him, given the excitement at the time about the endless possibilities of outer space?

However, fearing that his use of the mainframe would be frowned upon by his superiors, Ken decided to port the game to an older computer he had found at Bell Labs: a Digital Equipment Corporation (DEC) PDP-7 that had been left all but abandoned, as it was already considered obsolete. To port the game to the less expensive PDP-7, Ken designed his own operating system, which drew heavy inspiration from the Multics project. While he still had access to the Multics environment, Ken began writing a new file and paging system on it.

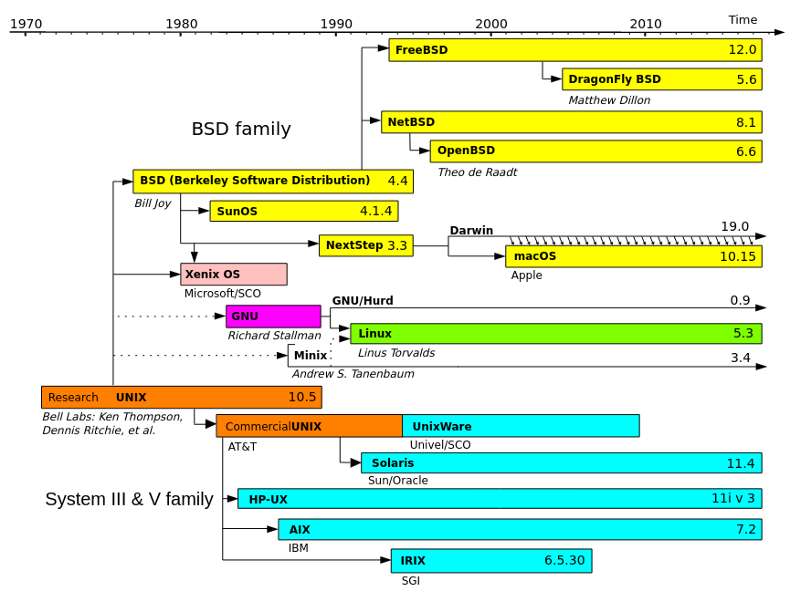

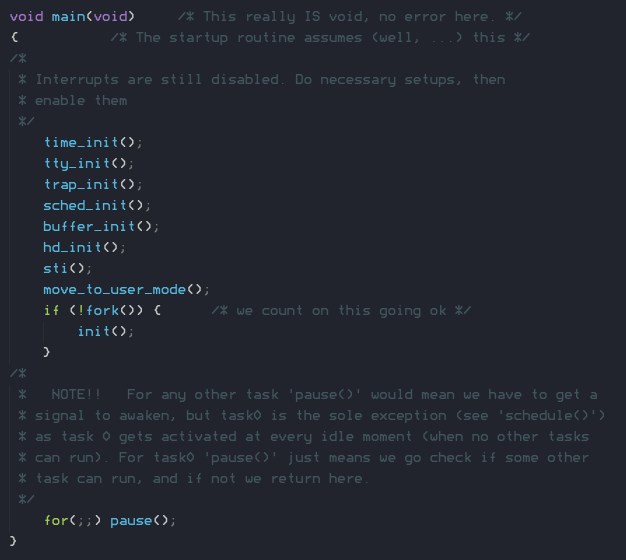

By late 1969, Thompson and his former Multics colleague Dennis Ritchie had built a team of Bell Labs engineers to design a simpler and more efficient alternative to Multics, which included a hierarchical file system, computer processes, device files, a command-line interpreter, and some smaller utility programs. This new operating system formed the base of what would eventually become the first version of Unix, whose First Edition is dated November 1971. In a bit of programmer humor, the operating system was first named Unics (pronounced “eunuchs”), for Uniplexed Information and Computing Service, a pun on the Multics acronym. No one actually remembers how the final spelling came to be Unix (trademarked by AT&T as UNIX).

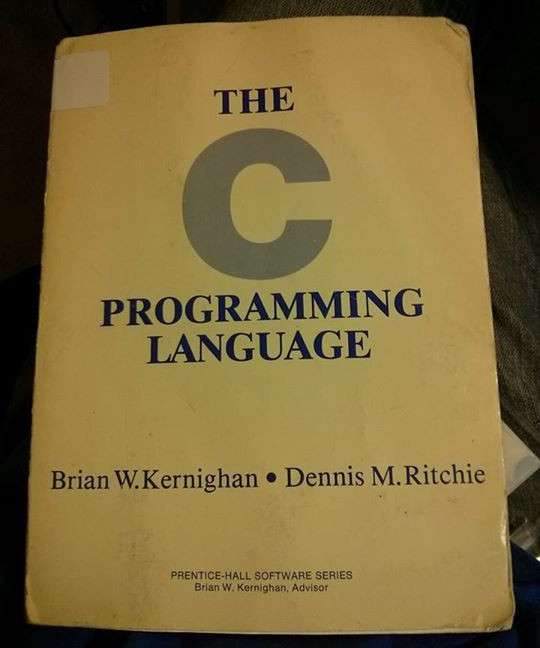

Over a period of about three years, Unix underwent multiple changes and three rewrites, resulting in Version 1, Version 2, and Version 3. By 1973, Dennis Ritchie’s C compiler had become powerful enough for critical software to be developed in the language. With another complete rewrite of the Unix system (Version 4), C became the heart of Unix. It remains one of the most widely used programming languages in history and is still essential for building many kinds of software today. In fact, Unix and C became so tightly intertwined that later development shaped the operating system and the programming language in specific ways to fit each other’s needs. By the time Version 4 was released, Unix had spread throughout Bell Labs like wildfire.

In 1973, Unix made its first appearance outside of Bell Labs when Ken Thompson and Dennis Ritchie presented the system at the Symposium on Operating Systems Principles. In 1974, after many requests from outside organizations to use the system, Version 5 was released to academic institutions throughout the United States under special licensing from AT&T. Version 6 debuted in 1975 and became the first commercially available Unix system.

However, when Unix found its way to the University of California, Berkeley in 1974, things took a dramatic turn in the Unix world. Bob Fabry, a highly acclaimed computer science professor at the institution, had requested Unix Version 4 after seeing it presented at the Symposium the year before. When Ken Thompson took a sabbatical from Bell Labs and wound up in the computer lab at Berkeley (his alma mater), he helped install Unix Version 6 on a new PDP-11/70 and began working on a Pascal implementation for the system, which was in turn improved upon by two graduate students, Chuck Haley and Bill Joy. Due to interest from other universities, Joy ended up compiling the first Berkeley Software Distribution (1BSD), which was an add-on to Unix Version 6 rather than a complete operating system in itself. He released it in March 1978, sending out approximately thirty copies.

In 1979, Version 7 was released, the final version of Research Unix from Bell Labs to see mainstream use. Because the Unix source code was shared so freely within the company, many different groups at Bell Labs began altering it for their own needs and use cases, resulting in a plethora of in-house Unix versions including CB UNIX, MERT, and PWB.

Unix began to gain prominence in commercial organizations as top engineers and computer science graduates were hired by rising technology companies and brought the operating system with them. Given the flexibility, portability, and power of Unix, many thought that it would become the universal operating system. This was partly because it was implemented in C, which allowed it to outlive specific hardware architectures in a way that operating systems written in machine-specific assembly languages could not.

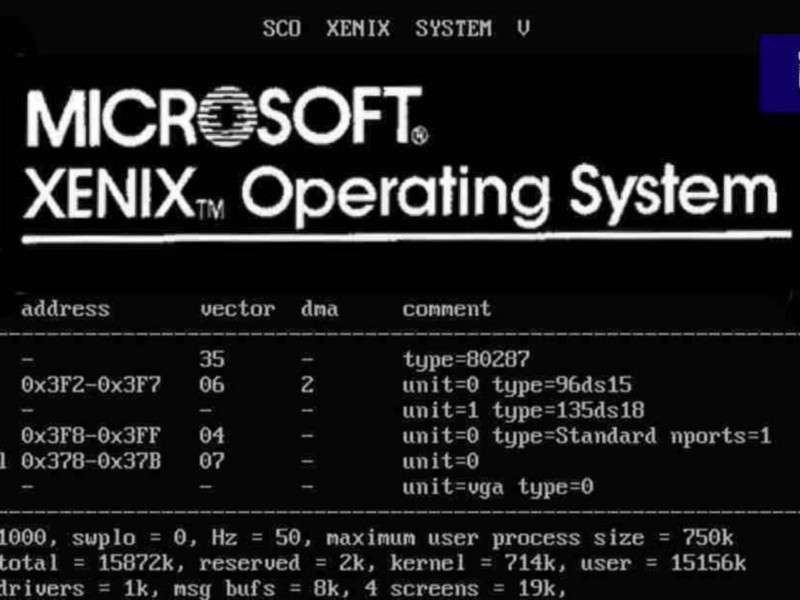

A myriad of commercial versions of Unix began to appear in the early 1980s, including Onyx Systems’ microcomputer Unix and Xenix, from Microsoft and the Santa Cruz Operation (SCO). In 1981, Bell Labs released a new version called UNIX System III, which was based on its predecessor, Version 7. In the wake of the antitrust case brought against AT&T by the U.S. Department of Justice, which was settled in 1982, AT&T moved quickly to push its own Unix system against all competitors.

In 1983, the company, tired of the confusion among all of its internal versions of Unix, merged them into what became known as UNIX System V (pronounced “System Five”). In addition to the software included in those flavors, System V also picked up some software from BSD, most notably the vi text editor and curses. Also in 1983, Richard Stallman founded the GNU Project in reaction to AT&T’s newer commercial UNIX licensing terms. The GNU Project believed in the freedom to use a computer, and the software available for it, however one would like. Its members began rewriting many popular Unix programs from scratch in order to avoid the UNIX copyright and distribute the system to anyone for free.

From 1979 to 1983, Bill Joy and company continued work on the BSD system, releasing 2BSD, 3BSD, 4BSD, and 4.1BSD. During this time frame, Joy also co-founded what would become a tech powerhouse, Sun Microsystems, which created a BSD variant known as SunOS for its workstations. To oversee the development of 4.2BSD, Duane Adams of DARPA formed a committee that included Bob Fabry, Bill Joy, Sam Leffler, Rick Rashid, and Dennis Ritchie, among other high-profile computer scientists. 4.2BSD hit the scene in August 1983 and became the first version released after Bill Joy left for Sun Microsystems. In his place, Mike Karels and Marshall Kirk McKusick became the BSD project leaders.

The latter half of the 1980s saw Unix become popular outside of academic and technical circles. It became viable in unexpected places like large commercial installations and mainframe computers. Though the Unix world may have seemed exciting and innovative on the surface, a large storm was brewing that would turn into what is now known as the Unix Wars, which lasted from the late 1980s until the mid-1990s. This war would seriously threaten the ability of Unix and Unix-based systems (like BSD) to break into the mainstream.

One of the main things stoking the fire was the lack of standardization within the Unix community. With different distributions like System V, BSD, unique combinations of the two, and Xenix (based on System III), it was a project divided. To rectify this problem, AT&T issued its own standard, the System V Interface Definition (SVID), in 1985 and required compliance from any operating system that wanted to use the System V brand.

However, another standardization effort (based on SVID) was released by the Institute of Electrical and Electronics Engineers (IEEE) in 1988: the Portable Operating System Interface (POSIX) specification. POSIX was designed as a compromise between System V and BSD. It was eventually adopted as a requirement for Unix systems used by the United States government, which earned it heavy favor and popularity.

In response, AT&T pushed even further, first collaborating with the Santa Cruz Operation to merge System V and Xenix into System V/386. After that, AT&T joined forces with Sun Microsystems to merge SunOS, Sun’s 4.2BSD derivative, with System V and Xenix. The result was the major, comprehensive release known as UNIX System V Release 4 (SVR4). In retaliation, the Open Software Foundation was formed under the sponsorship of the “Gang of Seven” (Apollo Computer, Groupe Bull, Digital Equipment Corporation, Hewlett-Packard, IBM, Nixdorf Computer, and Siemens AG) and began work on its own unified Unix system, known as OSF/1.

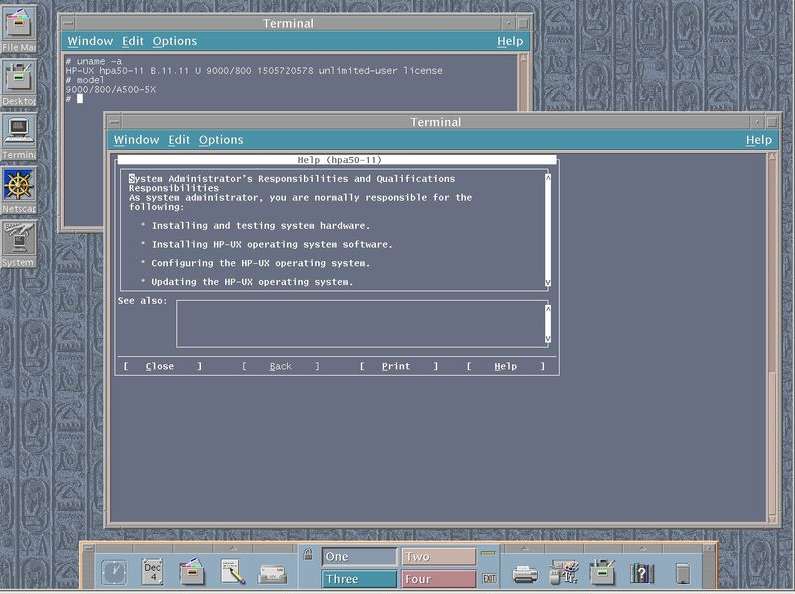

However, even with the attempt at unification by AT&T, Sun Microsystems, and SCO, other major tech companies began basing their own Unix systems on System V Release 4 with BSD functionality. Other commercial versions, most of them based on System V, include Sun Microsystems’ Solaris, HP’s HP-UX, IBM’s AIX, DEC’s Ultrix, Novell’s UnixWare, and Cray’s UNICOS for supercomputers. In 1988, Silicon Graphics (SGI) released its IRIX operating system, which was based on System V with some BSD extensions, for the company’s workstations and servers built on MIPS (Microprocessor without Interlocked Pipeline Stages) processors. IRIX contributed heavily to SGI’s success in the graphical computing field, where its systems were the most advanced of the time. In fact, IRIX became one of the most popular commercial versions of Unix of its era, thanks to the quality and cost of SGI’s hardware and the growing interest in digital graphics for video and film.

Another popular Unix-like operating system, MINIX, appeared in 1987. It was designed by Andrew Stuart Tanenbaum for computer science education and made popular by its companion textbook, Operating Systems: Design and Implementation. However, MINIX deviated further from UNIX than most and was considered only Unix-like, not Unix-based. The kernel and the rest of the code were written from scratch using a microkernel architecture, instead of the monolithic kernel found in many Unix and BSD systems of the time. Little did Tanenbaum know that his creation of MINIX would change the history of Unix forever.

While working at Unisys in 1987, linguist-turned-programmer Larry Wall became irritated by the lack of a proper text-processing language that combined the flexibility and ease of use of the popular awk tool and shell scripts with the performance, portability, and power of the C programming language. To solve this, Wall hacked together a simple domain-specific language that came to be known as the “Practical Extraction and Report Language”, or Perl for short.

Wall became interested in the free software movement that was picking up steam, in part thanks to Richard Stallman and the GNU Project, and saw what releasing source code into the wild for free could do for a project. He therefore released Perl into the Unix world, where it quickly blew up and soon became an essential tool in every Unix administrator’s, developer’s, and user’s toolbox.

As Perl was developed out in the open by hundreds of developers from all around the world, it grew from a domain-specific language into a general-purpose programming language. Even today, Perl is one of the first software packages installed on nearly every Unix-like system in existence, thanks to the flexibility and ease of use it provides for scripting the build and administration of large software projects (parts of the Linux kernel build among them).

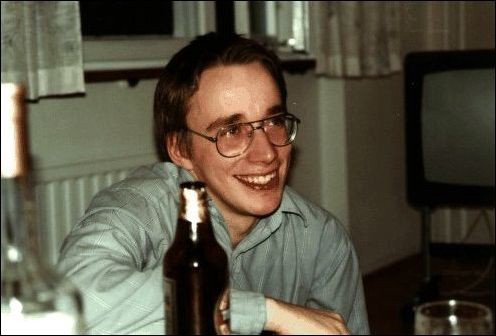

In the shadow of the Unix Wars, and alongside the rise of the free software movement (spearheaded by Richard Stallman with the GNU Project and Larry Wall with Perl), a young computer science student at the University of Helsinki would forever alter the course of history by releasing the source code for a free Unix-like kernel, partially inspired by the MINIX system he had learned and used in school, into the free software world in September 1991. His name was Linus Torvalds, and his kernel was Linux.

The Rise of Linux

“Hello everybody out there using minix –

I’m doing a (free) operating system (just a hobby, won’t be big and professional like gnu) for 386(486) AT clones. This has been brewing since april, and is starting to get ready. I’d like any feedback on things people like/dislike in minix, as my OS resembles it somewhat (same physical layout of the file-system (due to practical reasons) among other things).

I’ve currently ported bash(1.08) and gcc(1.40), and things seem to work. This implies that I’ll get something practical within a few months, and I’d like to know what features most people would want. Any suggestions are welcome, but I won’t promise I’ll implement them :-)

Linus (torv…@kruuna.helsinki.fi)

P.S. Yes – it’s free of any minix code, and has a multi-threaded fs. It is NOT protable [portable] (uses 386 task switching etc), and it probably never will support anything other than AT-harddisks, as that’s all I have :-(.“

Those were the harmless, unassuming words with which the young Linus Torvalds made the very first public mention of the Linux kernel, on the comp.os.minix Usenet newsgroup on August 25, 1991 (as a side note, I was also born in late August 1991, which just goes to prove it was a great month in human history XD).

A few weeks later, in September 1991, Linus (at 21 years of age) released the source code for Linux 0.01 to the world, effectively introducing a freely available Unix-like operating system kernel. It could already run important software from the GNU Project, including Bash (the Bourne-Again Shell), their rewrite of the Bourne shell, and the GNU C Compiler (GCC, later renamed the GNU Compiler Collection).

Originally, Linus didn’t care for the name Linux, as he thought it sounded too egotistical, and instead opted for the name Freax, a combination of the words “free”, “freak”, and “x” (to pay respect to its Unix roots). However, when Ari Lemmke, a volunteer system administrator at Helsinki University of Technology, was asked to host Linus’ project on the FUNET FTP server, he felt the name Freax wasn’t appropriate and named the project “Linux” anyway. Eventually, Linus consented to the new name.

At this point, the GNU Project had made an immense amount of progress under the leadership of Richard Stallman. Announced in September 1983, the project embodied Stallman’s vision of a completely free and open Unix-like operating system, built by writing the vital pieces of software that make up a Unix system completely from scratch. By the late 1980s, most of the GNU system was complete except for one (extremely important) piece: the kernel, which lies at the heart of any operating system. Stallman left the kernel for last so that it could be optimized to work seamlessly with the rest of the GNU stack and resolve many compatibility issues with the project’s other programs.

However, when looking at designing a kernel, the GNU Project decided to go a different route than the Unixes and BSDs. Instead of the popular monolithic architecture, the GNU Project decided to build what is known as a microkernel, which effectively splits up the kernel into smaller, distinct programs that work together to give the desired functionality.

The microkernel concept actually followed the Unix philosophy much more faithfully–do one thing and do it well. In addition, this architecture would allow for the different components of the kernel to be separated in such a way that if one piece failed, the entire kernel wouldn’t crash, allowing for much greater stability.
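
The fault-isolation argument can be illustrated with a toy sketch. The example below is not how the Hurd, MINIX, or any real kernel is built. It is a minimal Python illustration, assuming three hypothetical "services" (the names `filesystem`, `network`, and `console` are invented here), that contrasts running them all inside one shared process with running each in a process of its own.

```python
import subprocess
import sys

# Hypothetical "kernel services", each written as a tiny Python program.
# The network service contains a simulated bug.
SERVICES = {
    "filesystem": "print('filesystem: ok')",
    "network": "raise RuntimeError('simulated driver bug')",
    "console": "print('console: ok')",
}


def run_monolithic(services):
    """Run every service inside one process: the first failure stops everything."""
    status = {name: False for name in services}
    try:
        for name, source in services.items():
            exec(source, {})
            status[name] = True
    except Exception:
        pass  # the whole "kernel" has gone down; later services never ran
    return status


def run_isolated(services):
    """Run every service in its own process: a crash only affects that service."""
    status = {}
    for name, source in services.items():
        result = subprocess.run(
            [sys.executable, "-c", source],
            capture_output=True,
            text=True,
        )
        status[name] = result.returncode == 0
    return status


if __name__ == "__main__":
    print("monolithic:", run_monolithic(SERVICES))
    print("isolated:  ", run_isolated(SERVICES))
```

In the monolithic run, the failing network service takes the console service down with it because they share one process. In the isolated run, only the network service is reported as failed. Real microkernels pay for this isolation with message-passing overhead between components, which was one of the points argued over in the debates of the time.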

In fact, this question of microkernel versus monolithic kernel became a surprising area of contention among operating system developers at the time. Andrew Tanenbaum, the developer of the MINIX operating system (which had partially inspired Linux), was extremely vocal about the virtues of using a microkernel in place of a monolithic kernel. He got into heated debates with Linus (who chose a monolithic design for Linux) about his architectural choices on the same Usenet newsgroup where Linux was first announced.

Tanenbaum went on to say that Linux would be nothing more than a hobby project due to its perceived limitations in this area. It wouldn’t take long for him to be proved wrong.

At this time, the Unix Wars continued to rage on. Due to the divide of talented developers between AT&T’s UNIX System V standard and the BSD project, the stability of Unix started to come into question. The developers began competing to launch new features, more efficient power solutions, and improved performance, which left little time for them to worry about the stability of their systems. Consequently, this race for dominance ultimately hurt the viability of both operating systems in the industrial and commercial sectors.

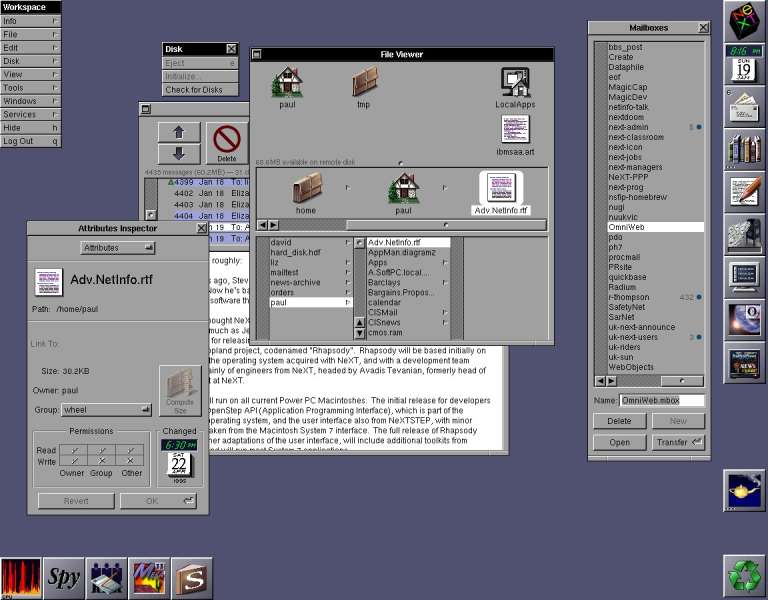

Out in Silicon Valley, another company began making waves with its Unix- and BSD-based operating system. It started with the 1985 ousting of Apple Computer, Inc. co-founder Steve Jobs by his own board. Leaving Apple behind, Jobs moved on to his next venture by founding a new company called NeXT in order to compete with his former employer. In the late 1980s, NeXT began developing its own proprietary operating system, NeXTSTEP, for its workstation computers. NeXTSTEP was built as a hybrid operating system, based on Carnegie Mellon’s Mach microkernel with source code from BSD.

In the early 1990s, Keith Bostic, a well-known BSD developer, proposed releasing the sections of BSD that were free of AT&T’s proprietary code under the same license as the earlier Net/1 release. To get out from under AT&T’s commercial license, the BSD developers began rewriting the important utilities so that they contained no AT&T code. The first version of the BSD license, written in 1988, was one of the most permissive licenses of its time.

In June 1991, Net/2 was released and became the base for two ports of the operating system to the Intel 80386 architecture: the free 386BSD and the proprietary BSD/OS. Berkeley Software Design Inc. (BSDi), a company founded by former UC Berkeley researchers and BSD contributors Donn Seeley, Mike Karels, Bill Jolitz, and Trent Hein, sold BSD/OS licenses and professional support, and ran into legal trouble with AT&T’s Unix System Laboratories (USL) in 1992. USL was born out of the breakup of AT&T’s telecommunications monopoly, which allowed the company to sell its UNIX software and support, and it retained the System V copyright and UNIX trademark.

Shortly after 386BSD was released, two of the most important independent BSDs, NetBSD and FreeBSD, were started with it as their base. Both offshoots were created in response to the lackluster pace of development around 386BSD, which faded away soon after NetBSD and FreeBSD were established in April and November of 1993, respectively.

USL sought an injunction on the sale and distribution of Net/2 (and BSD/OS) until it could be determined whether there were any copyright violations in the source code. The resulting legal uncertainty put a major damper on the development of most BSD systems for nearly two years.

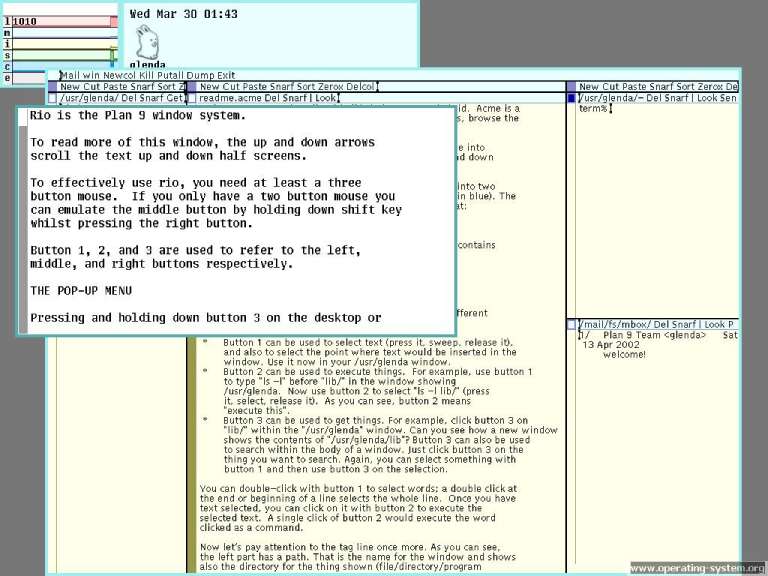

Meanwhile, at Bell Labs, the creators of Unix began working on a new research operating system in the late 1980s and early 1990s. Though Version 7 had been the last commercially available Research Unix system (and the predecessor to System V), the team at Bell Labs, including Ken Thompson, Dennis Ritchie, Rob Pike, Dave Presotto, Phil Winterbottom, and Brian Kernighan, began experimenting with ways to improve the Unix architecture.

After working through Research Unix versions 8, 9, and 10, the Unix team at Bell Labs decided to take their ideas in a new direction and began work on Plan 9 from Bell Labs (Plan 9 for short), an operating system that used Research Unix 10 as a starting point. The name is a reference to the 1959 science fiction film Plan 9 from Outer Space.

Plan 9 attempted to push the Unix philosophy of “everything is a file” even further with a network-centric file system, and it replaced terminal I/O with a windowing system and graphical user interface known as Rio. In addition, the typical Unix shell was replaced by the rc shell. Plan 9 was written in a special dialect of ANSI C and depended heavily on complex mouse input (including “chording”), rather than on the keyboard as most Unix systems of the time did.
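
The “everything is a file” idea that Plan 9 extended can still be seen on any Unix-like system today. The following is a minimal Python sketch of the traditional Unix interface, not of Plan 9 itself. The function name `write_everywhere` is made up for this example, and it assumes a system with a null device such as `/dev/null`. The same `os.write` call works unchanged on a regular file, a device file, and a pipe.

```python
import os
import tempfile

MESSAGE = b"hello, unix\n"


def write_everywhere(message=MESSAGE):
    """Write the same bytes to three kinds of file using the same os.write call."""
    written = {}

    # 1. A regular file on disk.
    fd, path = tempfile.mkstemp()
    try:
        written["regular file"] = os.write(fd, message)
    finally:
        os.close(fd)
        os.remove(path)

    # 2. A device file: the null device discards whatever it receives.
    fd = os.open(os.devnull, os.O_WRONLY)
    try:
        written["device file"] = os.write(fd, message)
    finally:
        os.close(fd)

    # 3. A pipe, the kernel object behind shell pipelines.
    read_fd, write_fd = os.pipe()
    try:
        written["pipe"] = os.write(write_fd, message)
    finally:
        os.close(write_fd)
    try:
        echoed = os.read(read_fd, len(message))
    finally:
        os.close(read_fd)

    return written, echoed


if __name__ == "__main__":
    counts, echoed = write_everywhere()
    for kind, count in counts.items():
        print(f"{kind}: wrote {count} bytes")
    print("read back from pipe:", echoed)
```

Because every target is just a file descriptor, the calling code never needs to know what sits behind it. Plan 9 applied the same idea to resources such as network connections and windows.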

On the other side of the world, Linus’ pet project, Linux, had gained a significant following, with first tens and then hundreds of developers contributing to the source code. When Linux was first released, Linus had placed it under a license more restrictive than the GNU Project’s GNU General Public License (GPL), one that didn’t allow Linux code to be distributed commercially or used in proprietary software. However, Linus began to realize that a kernel wasn’t very useful to people on its own. In order to grow his operating system, Linus decided to re-license Linux under the GNU GPLv2 with the 0.12 release.

This proved to be one of the most important decisions made by Linus in the early days of Linux. After the re-licensing, GNU engineers began working on the Linux kernel in order to make it compatible with a wide range of extremely useful GNU software packages. This allowed for a fully functioning operating system to begin being distributed freely. In addition, the open nature of development allowed people from all over the world to contribute code to the Linux kernel, offering a diverse view on its architecture and functionality, and allowing for the code to improve and iterate much more quickly than operating systems built by single, proprietary teams.

This form of free and open source development had already shown significant promise with the GNU Project and the non-profit organization that helped fund it, the Free Software Foundation (FSF), which Richard Stallman founded in 1985. Many extremely talented software engineers (even some from large technology companies of the time) began volunteering their free time to improve and extend the functionality of the Linux kernel and the GNU software suite surrounding it.

One major advantage of the GPLv2 license was that it required anyone distributing a modified version of the code to make the modified source available under the same terms, so improvements could find their way back upstream into the Linux kernel itself. This differed greatly from other popular free software licenses, like the BSD license, which basically allowed anyone to take and modify the source code as they saw fit with nothing returned to the upstream project. As a result, Linux grew even faster, because the companies that wanted the technology contributed back to the project and its growing ecosystem. BSD, by contrast, lost a lot of ground and possible improvements as corporations like NeXT (and later, Apple) took the BSD source code and turned it into their proprietary systems without so much as a nod.

The idea of a free and open Unix-like operating system became extremely important at this time, given the massive licensing fees surrounding commercial UNIX systems as well as the legal issues plaguing BSD development. Simply put, Linux came at precisely the right time to take the world by storm. In fact, Linus has even commented that if 386BSD or the GNU Hurd, GNU’s multiserver microkernel, had been freely available and functional at the time, he wouldn’t have started work on Linux at all.

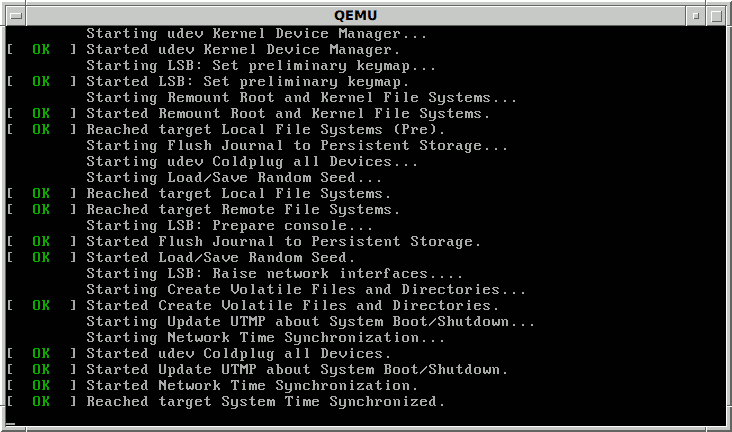

Early attempts by the GNU Project to make the Hurd a viable kernel for a Unix-like system proved more difficult than originally thought, owing to some key architectural decisions by Stallman and company, including the use of an advanced and, at the time, largely theoretical microkernel architecture built on GNU Mach. However, even without a functional kernel of their own, the GNU Project and the Linux developers began to work very closely together and, in late 1991, the first Linux “distribution”, known as “Boot-root”, hit the net.

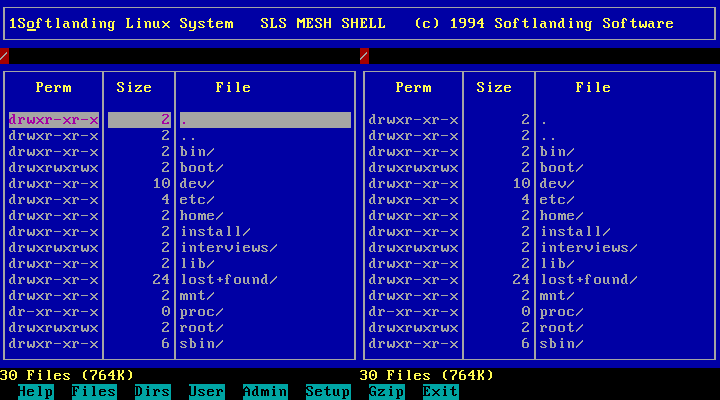

Boot-root took its name from the fact that the system consisted of two floppy disk images (Boot and Root). One contained the Linux kernel itself, for booting and installing the system, and the other contained the GNU utilities and file system setup. However, setting up and installing Boot-root proved to be an extremely difficult process. To improve on it, other Linux distributions began popping up, such as MCC Interim Linux, Softlanding Linux System (SLS), and Yggdrasil Linux/GNU/X.

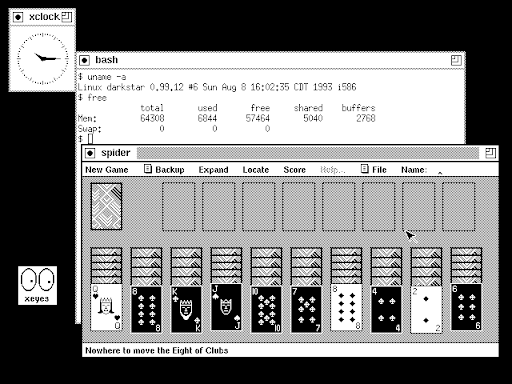

One of the most important events in early Linux history was the porting of the X Window System by Orest Zborowski in 1992, which allowed graphical user interface (GUI) support for the very first time. Without this extremely vital contribution, it is unlikely that Linux would have gained as much popularity and momentum as it did in such a short time span.

Of course, many corporations had already deployed proprietary Unix systems, so many software developers who had become familiar with AT&T’s UNIX System V, Sun Microsystems’ Solaris, IBM’s AIX, Hewlett-Packard’s HP-UX, Silicon Graphics’ IRIX, Novell’s UnixWare, Cray’s UNICOS, and many more were eager to try out Linux as a free version of the software they loved.

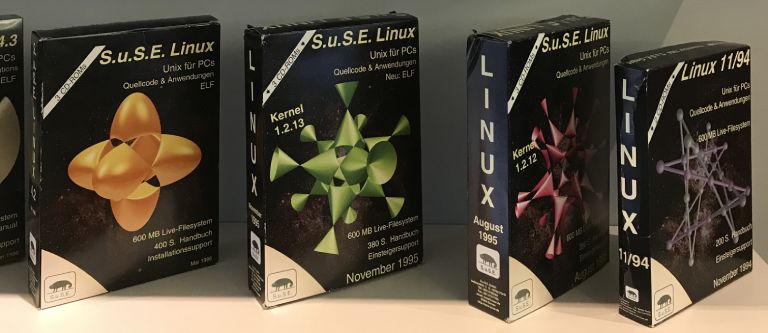

In September 1992, the world was introduced to the first company offering Linux support. Roland Dyroff, Burchard Steinbild, Hubert Mantel, and Thomas Fehr founded the Software and Systems Development Corporation, which became known as S.u.S.E. (from the German Software- und System-Entwicklung). The company started out by providing professional support to companies using commercial Unixes while also distributing early copies of Linux based on SLS.

SLS became the most popular distribution at the time because it was the first to include more than just the Linux kernel, GNU software, and common utilities–it also included an early implementation of the X Window System, for example. In many ways, SLS could be considered the first Linux distribution by today’s standards.

However, not everyone was happy with SLS. In July 1993, Patrick Volkerding decided to rewrite part of the SLS codebase to forge his own Slackware distribution. Around the same time, Ian Murdock, who was also dissatisfied with SLS, set out to create Debian, which saw its first release a few months later in September. Both Slackware and Debian blew up in popularity very quickly among the small, but burgeoning open source software community. In fact, S.u.S.E. began selling its first commercial Linux product in 1994–a modified version of Slackware.

In March 1993, the Common Open Software Environment (COSE) initiative was formed by what is now known as the “Big Six” (or “SUUSHI”) of the commercial Unix world: Unix System Laboratories (AT&T), Univel, Sun Microsystems, Hewlett-Packard, IBM, and the Santa Cruz Operation. Its goal was to bring the Unix community together against what had become a Microsoft monopoly over the corporate desktop, which was quickly bleeding into the Unix realm of technical workstations and enterprise data centers.

This partnership was quite different from earlier commercial Unix alliances in that it wasn’t formed as a coalition against other Unix vendors, and it focused on creating standards for existing Unixes rather than on improving technology for commercial domination of the Unix market. The COSE initiative led to two positive outcomes for the Unix vendors: Spec 1170, which took into account all of the OS interfaces used by the major Unix vendors of the time, and the Common Desktop Environment, a shared X11-based user environment and graphical user interface built on the Motif widget toolkit. A few months later, Spec 1170 would be reworked into the Single UNIX Specification, which has the popular POSIX standard at its core.

In 1993, the Univel initiative brought about the purchase of AT&T’s USL by Novell. This allowed Novell to acquire the rights to the UNIX operating system and release their own version of UNIX based on System V called UnixWare, which borrowed source code from their popular NetWare network operating system.

Consequently, Novell inherited the USL copyright lawsuit, and the company pushed to settle the matter with the BSD side (backed by UC Berkeley and BSDi) in January 1994. The terms of the settlement: Berkeley had to remove three files out of 18,000 and make changes to several more. USL copyright notices were also added to around seventy files, which were nonetheless allowed to remain freely distributed.

Even with the outcome in Berkeley’s favor, it was too little, too late. Linux was on a meteoric rise and had already made its way from small hobbyist project to commercial product, thanks in part to the hundreds of developers around the world working to improve it. And there was no slowdown in sight.

Even more rapid progress on Linux started with the creation of another distribution by Marc Ewing in early 1994. Ewing named his creation Red Hat Linux after the red Cornell University lacrosse hat, given to him by his grandfather, that he wore while attending Carnegie Mellon University. He released the software in October of that year in what would become known as the “Halloween release”. In 1995, Bob Young, the owner of ACC Corporation (a business that sold Unix and Linux software and accessories), bought Ewing’s business and merged the two into Red Hat Software, of which Young became the CEO.

In early 1994, Berkeley released the updated source code for BSD, which became known as 4.4BSD-Encumbered because it was still covered by the USL source license. Berkeley also released a version known as 4.4BSD-Lite, in which all files declared by the ruling to contain USL copyrighted material were removed or altered. The developers also made some significant changes to the system and decided that this release would become the future of BSD. In 1995, the last revision of BSD would be released by Berkeley, known as 4.4BSD-Lite Release 2, and would mark the end of official BSD development at Berkeley as the Computer Systems Research Group was dissolved.

Though the development of BSD left its birthplace, many enthusiastic BSD contributors kept the BSD embers aflame through the NetBSD and FreeBSD projects, which adopted the open source development model that Linux had greatly benefited from. In order to diversify, the two projects took different approaches in what they wished to accomplish. NetBSD was heavily invested in making sure that the operating system worked flawlessly on a wide range of computer architectures, while FreeBSD went the route of becoming the commercial flagship and Swiss Army knife of BSD.

Also in 1994, the community-based Debian distribution began trying to solve the problem of software installation, removal, and updates. To accomplish this, Ian Murdock wrote a shell script called “dpkg”, which allowed users to easily work with Debian’s own package format (with the .deb extension). This allowed the Debian developers to package software in their own repositories and spare users the burden of installing everything from source, as was done prior to dpkg. Eventually, dpkg would grow significantly more complex, causing it to be rewritten in the Perl programming language instead of shell. The rewrite made it much easier to extend the tool with many more useful features.

By 1997, Red Hat Linux had grown into the largest enterprise Linux distribution in the world. Thanks to its massive success with enterprise solutions as well as desktop users, Red Hat became the only Linux distribution to which the proprietary Common Desktop Environment (CDE) had been ported. As mentioned earlier, the CDE was the result of collaboration between the major commercial UNIX players back in 1993. In order to make CDE the “standard” graphical environment for all UNIX System V offshoots, the group of corporations agreed to each write certain pieces of the Motif-based environment and bring them all together into a single, cohesive package.

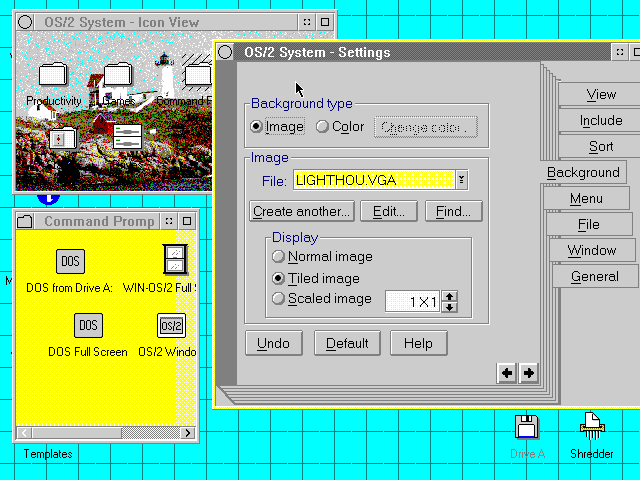

Consequently, USL provided the desktop manager components and scalable systems technologies from their UNIX System V; HP built the primary environment from their own Visual User Environment (VUE), which was already utilized in HP-UX; IBM contributed the Common User Access (CUA) model from their OS/2’s Workplace Shell; and Sun Microsystems integrated its ToolTalk application interaction framework and a port of the DeskSet productivity tools from Solaris.

By gaining the port of a very reliable and popular (for the time) graphical user interface, Red Hat Linux began to explode even more in the enterprise sector and really set its sights on system administrators. However, across the Atlantic in Germany, another Linux company was starting to make waves. S.u.S.E. had started truly seeing the vast opportunities available for Linux vendors in the enterprise sphere, partly due to the success of Red Hat.

By 1996, they had moved away from their Slackware-based distribution and decided to build a new Linux-based operating system on top of a little-known distribution called Jurix, which was developed and maintained by an employee at the legal department of Saarland University, Florian La Roche. La Roche joined the S.u.S.E. team and began work on YaST (Yet another Setup Tool), which would become a central utility featuring the advanced installer and configuration software for their shiny new product.

Later that year, S.u.S.E. finally unveiled their distribution to the world as S.u.S.E. Linux 4.2, with an odd version number that referenced the popular Hitchhiker’s Guide to the Galaxy. YaST also saw its initial release as 0.42 in the same vein.

In order to humanize the Linux operating system, Linus and other developers decided to create a mascot (or “brand character”) for the operating system kernel. This was quite a different step than commercial UNIX systems took, which provided nothing but a very technically oriented logo. They wanted the mascot to be friendly, fun, and recognizable–a nod to the open source development that Linux utilized and the general friendliness and fun attributed to being a part of the open source community.

In 1996, Larry Ewing created the first iteration of the Linux mascot, which was chosen as a penguin at Linus’ request. The mascot adopted the nickname “Tux” from a suggestion by James Hughes, who recommended it stand for “Torvalds’ Unix”. Tux was also a shortening of the word tuxedo, which reflects the natural coloring of penguins. In true open source spirit, Tux was created using a free software graphics package known as GIMP (GNU Image Manipulation Program).

Tux was inspired by an image that Linus found on an FTP site of a penguin figurine, which was made in a similar style to the Creature Comforts characters that were created by Nick Park. For fun, Linus claimed that the original inspiration for using a penguin was due to his contracting “penguinitis” after being bitten by a penguin at the National Zoo & Aquarium in Canberra, Australia.

In 1997, Red Hat unveiled one of the most important features of their budding distribution–the Red Hat Package Manager (RPM). Though software package management wasn’t a new concept to Unix-like systems by any means, RPM actually improved upon the model by attempting to sort out dependency problems that come with installing software. By tracking the dependencies of specialized RPM packages (with the .rpm file extension), the open source package manager helped its users save a lot of time searching around for dependent software required by the packages they needed.

Though the first iterations were obviously not perfect, RPM was picked up by S.u.S.E. in 1997 and integrated into their own distribution. In fact, RPM became so popular that it was adopted by other operating systems like Novell’s NetWare, IBM’s AIX, and Oracle Linux. By this time, the advantages of using Linux for enterprise systems were becoming quite clear to many organizations. In only a few years, Linux had started to take a shot at the Microsoft monopoly, and as its development matured, it grew into an excellent operating system for enterprise servers.

Linux became a favorite of system administrators everywhere, especially those who had grown up with Unix. The free aspect of the operating system along with the relatively small price to pay for support compared to commercial licensing allowed companies like Red Hat and S.u.S.E. to grow into proper technology companies.

Though Red Hat and S.u.S.E. went the way of enterprise, another Linux distribution project, focused on free and open source software, also began to rise in popularity amongst Linux hobbyists. Between 1993 and 1998, Debian grew into the standard-bearer for free and open source operating systems. In fact, Debian was sponsored by the FSF for a whole year (1994-95), during which it surged in popularity and gained many contributors (over 200 by 1998).

Due to Debian’s commitment to free software, it became the main operating system used by the growing free software movement throughout the 1990s. When Bruce Perens took over the project in 1996, he made some massive strides to put Debian out ahead of other distributions at the time. He led the conversion project from a.out to ELF and created a new Debian installer that could be run from a single floppy disk via the BusyBox program he created. Due to these massive improvements, Debian started to become known as one of the easier distributions to get up and working on a computer.

Another Debian-based initiative began in late 1998 that would attempt to see how far the project could go. Even though the GNU Hurd kernel wasn’t ready for primetime, many free software contributors and enthusiasts began trying to port Debian to the Hurd. The project became known as Debian GNU/Hurd.

On the other side of the world, Theo de Raadt, a core member working on NetBSD, was removed from the project after arguments with the rest of the core team. In 1995, Theo had his access rights taken away from the NetBSD source repository and went off to build his own. Theo’s fork of NetBSD 1.0 became known as OpenBSD and it didn’t take long until it caught on and made a huge splash in the BSD scene.

When Theo was contacted by a local security software company, Secure Networks (later acquired by McAfee), who were developing a network security tool for auditing codebases, OpenBSD shifted its ideology to one of holding security at the highest level of importance, even over performance. Consequently, the OpenBSD developers re-implemented some key C standard library functions to be much more secure than their popular counterparts and took many other design directions to build as secure a system as possible.

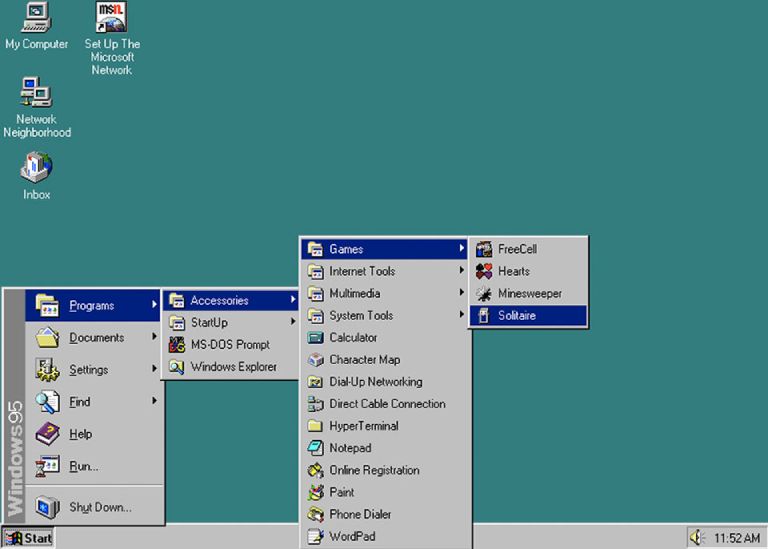

Though Linux began dominating the server market, Linux distributions rarely focused on the massive desktop computer market, mostly because there was no competitive graphical user interface that could stand up against the quality of Microsoft Windows. The truly revolutionary design of Windows 95 was a massive step in bringing the personal computer to a much wider audience than ever before. Of course, lower prices on hardware contributed heavily to the adoption of the personal computer, but it was the intuitive and simple design of Windows 95 that took the world by storm.

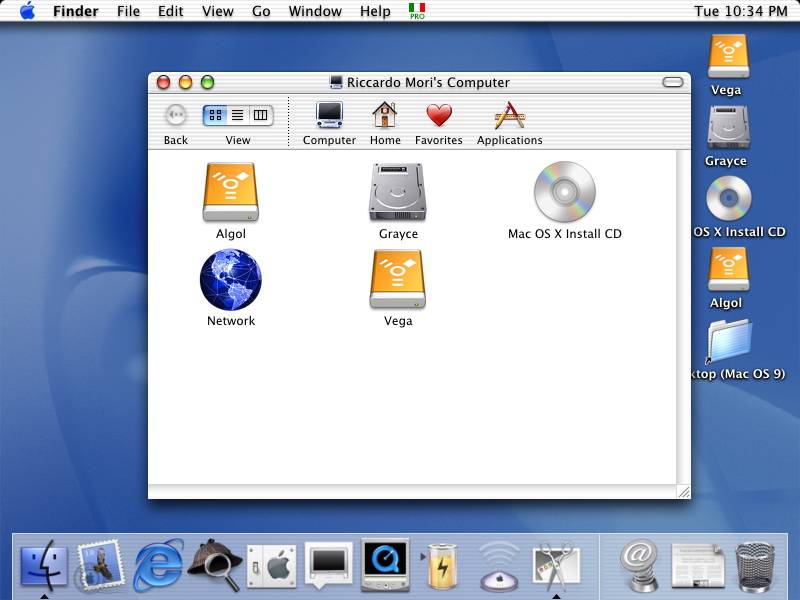

The other main competitors in the personal computing space were the incredibly unique BeOS and NeXTSTEP’s successor, OpenStep, which was developed by Steve Jobs’ NeXT and Bill Joy’s Sun Microsystems. However, in 1997, NeXT was acquired by Steve Jobs’ old company, Apple Computer Inc., and Jobs returned to the company he had co-founded, soon becoming its CEO. Consequently, OpenStep wouldn’t be scrapped at all. Instead, Apple made the decision to combine OpenStep with the old application layer of Mac OS, which yielded the user-friendly Mac OS X operating system. With NeXTSTEP as the base, Mac OS X would grow into the most popular BSD system on the desktop computer.

One reason that Linux failed to penetrate the desktop market was that the kernel developers themselves remained focused on server usage and catered to that instead of the desktop. However, this would soon change with an initiative by Matthias Ettrich, a student at the University of Tübingen.

Ettrich was unhappy about the inconsistencies in applications for Unix-like systems. Unlike Microsoft Windows, the graphical applications on Unix systems did not look, feel, or function alike because they were largely developed by small, separate teams of developers. In response, Ettrich made a decision that would push Linux into the modern era: to build a desktop environment and application ecosystem that followed a specific set of guidelines.

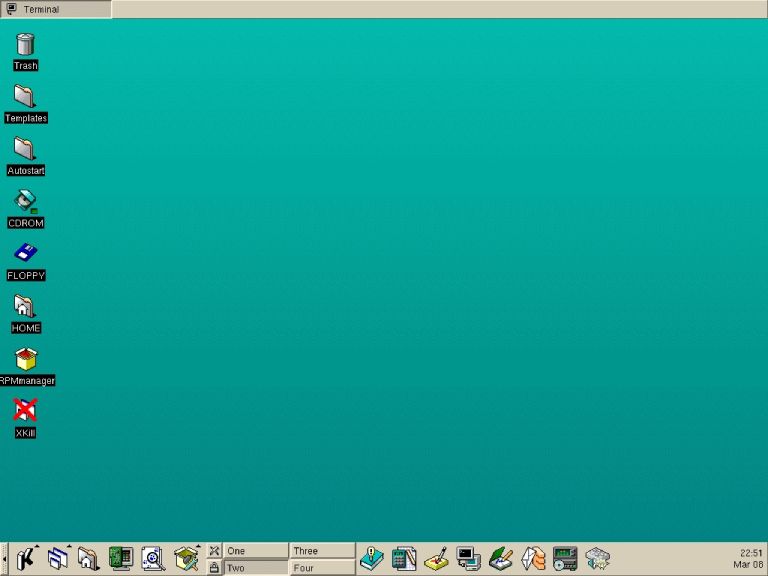

His project, KDE, was originally named the Kool Desktop Environment as a poke at the inconsistent and sometimes awkward CDE, which ruled the graphical user interfaces on Unix systems. Ettrich started the KDE project in 1996, and its ideas and implementation would open up Linux (and Unix as a whole) to an entirely different audience, the casual computer user, and would forever alter the trajectory of Linux.

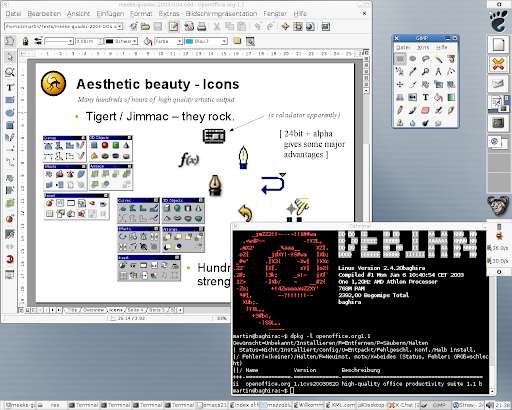

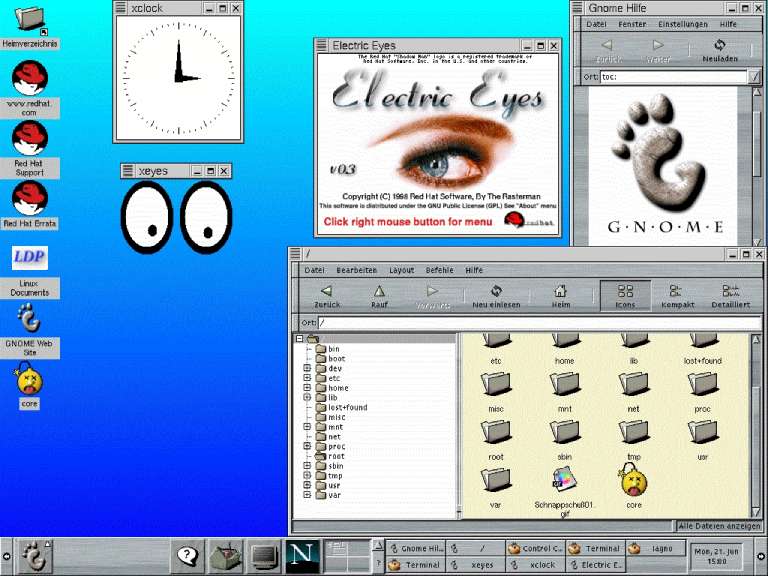

However, the KDE project used the proprietary Qt (pronounced “cute”) widget toolkit, which caused some members of the free software movement to look for alternatives. In 1997, Miguel de Icaza and Federico Mena chose to build their own desktop environment and application ecosystem, GNOME, using a completely open source widget toolkit, the GIMP Toolkit (GTK). At its founding, GNOME stood for the GNU Network Object Model Environment and used the GNU Lesser General Public License (LGPL), created by the FSF. Shortly after the release of GNOME 1.0, Red Hat adopted it and phased out the old CDE that it had been using.

In 1999, Red Hat went public. It was the defining moment of a decade-long struggle that Linux faced to become recognized and respected in the larger software world. With Red Hat’s extremely successful IPO, it was clear that Linux was taking over and the train wouldn’t be losing steam anytime soon.

As the story of Unix moves into the 21st century, the myriad advancements in global communication truly started to make open source development an effective, efficient, and reliable strategy. The following two decades would be defined by a rapid surge in developer efficiency as well as friendly competition between different open source software projects to become the Linux standard. Though Linux gained enormous popularity throughout the 1990s, the next millennium would prove even more fruitful in a variety of different ways.

Modern Day Unix

In the shadow of the media hysteria surrounding the Y2K phenomenon (or “Millennium Bug”), the open source developers working on the Linux kernel kept chugging along. After Red Hat’s extremely successful IPO in 1999, Linux had more than proved itself as an enterprise solution that could certainly replace legacy UNIX systems, trading the enormous cost of a commercial license for a free and openly developed Unix-like operating system with a much more affordable support contract model.

However, many Linux enthusiasts at the time realized that Linux could be so much more than simply an operating system sitting on some server in a data center. With the leaps and bounds made on Linux development in the 1990s, many thought that Linux had the potential to take a shot at Microsoft’s extremely popular and proprietary Windows operating system, which absolutely dominated the personal computer market.

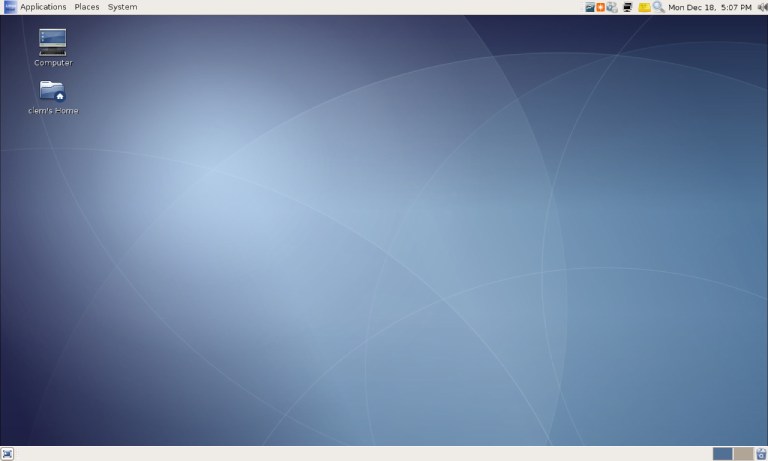

One such man, Gaël Duval, set out to turn this idea into reality. In July 1998, he took the most popular enterprise Linux distribution, Red Hat Linux, and merged it with the up-and-coming K Desktop Environment (KDE) to provide a much simpler and more intuitive graphical user interface (GUI) for those who were familiar with the popular Windows 95-style GUI. The initial release, Linux-Mandrake 5.1, was based on Red Hat Linux 5.1 and followed the versioning scheme of its parent.

The response to the release of Mandrake was enormous, much greater than Duval had ever expected. Duval released Mandrake on a few FTP servers and announced it on a few Linux news websites before going on a two-week vacation. When he returned, he found his inbox flooded with nearly 200 emails regarding his new project. Within a few months of the initial release, Duval, along with some developers that he had met via Mandrake’s success, formed a company, MandrakeSoft (a shot at Microsoft), in order to sell CDs of Mandrake to pay for the development costs.

Besides the inclusion of KDE as the main desktop environment, Mandrake also included other tools for user-friendliness including the urpmi (RPMDrake) package manager, based on Red Hat’s RPM with the intent of addressing some of RPM’s limitations at the time, the MandrakeUpdate tool to enable updates through the user interface, and a graphical installer for the distribution. All of these features combined to make Mandrake the easiest distribution to install, use, and update over the multitude of other budding Linux distributions.

In a similar vein, the now-massive Debian introduced a much higher-level package management tool on top of dpkg in 1999, called the Advanced Package Tool (APT), in order to compete with RPM. Though dpkg still worked with all of the individual DEB files, APT helped to manage dependencies between them as well as release tracking and version pinning. One of the greatest features implemented in APT was that it performed topological sorting of all packages passed to it, ensuring that each package reached dpkg only after the packages it depended on.
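To make the idea of topological sorting concrete, here is a minimal sketch in Python using the standard library's `graphlib` module (Python 3.9 or later). It is not APT's actual algorithm, and the package names and the `install_order` helper are hypothetical, chosen purely for illustration. It only shows how a dependency map can be turned into an order in which every package comes after the packages it depends on.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map: each package maps to the set of
# packages that must be installed before it.
depends_on = {
    "editor": {"libgui", "libc"},
    "libgui": {"libc"},
    "libc": set(),
}


def install_order(dependencies):
    """Return the packages so that every dependency precedes its dependents."""
    # TopologicalSorter treats the mapped values as predecessors, so
    # static_order() yields dependencies first. It raises CycleError
    # if the packages depend on each other in a loop.
    return list(TopologicalSorter(dependencies).static_order())


order = install_order(depends_on)
print(order)
```

A circular dependency cannot be ordered this way at all, which is one reason real package managers need additional logic beyond a plain topological sort.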

In 2000, Apple, having purchased the OPENSTEP for Mach operating system along with Steve Jobs’ NeXT, released details of a new operating system kernel that it would be using in the upcoming Mac OS X 10.0 release, codenamed “Cheetah”. The hybrid XNU kernel sat at the heart of Darwin, the core of this new operating system. Attempting to take advantage of the best features of both the microkernel and monolithic kernel design, XNU utilized an implementation of the Open Software Foundation’s Mach kernel (based on the original designs from Carnegie Mellon in 1985). Additionally, Apple merged the OpenStep API that NeXT had built with Sun Microsystems with its old Mac OS interface, producing the Aqua user interface and the API that would eventually become Cocoa.

In order to create a Unix-like operating system, Apple engineers began using code from various BSDs, especially the FreeBSD project. This part of the operating system provides the POSIX application programming interface along with many other Unix-like functionalities. Though Apple has used quite a bit of code from FreeBSD, it is obviously heavily modified for the XNU architecture, which is incompatible with FreeBSD’s monolithic design. The release of Mac OS X was the first step that Steve Jobs planned in order to bring his old company back from the grave.

In early 2001, Judd Vinet was inspired by the CRUX distribution to create a minimalist version of Linux in order to give greater control to the user over what software they wanted to install on their system. The project became known as Arch Linux due to Vinet’s enjoyment of the word’s meaning of “the principal” as in “arch-enemy”. Vinet was enamored with the simplicity of certain distributions like Slackware, PLD Linux, and CRUX, but saw them as unusable due to their lack of a strong package management solution that could compare to Red Hat’s RPM or Debian’s APT. In response, Vinet wrote his own package manager, pacman, to handle package installation, removal, and upgrades smoothly. Arch Linux grew slowly at first, but more and more Linux users began to see the simplicity of the distribution as well as the incredible amount of control available.

Beyond pursuing a minimal installation and rolling release updates, the Arch Linux developers had become frustrated with the state of documentation around Linux and open-source projects in general. To change this, they created the ArchWiki, which would become one of the most comprehensive documentation projects in Linux history. The idea partially grew from the fact that Arch Linux was more difficult to install than many other distributions because it forced users to configure everything about their system, from networking to desktop environments, which made it difficult for people without extensive Linux experience to get started. The Arch Linux developers wanted a place where anyone willing to put in the time to learn could benefit from the major advantages that Arch Linux provided.

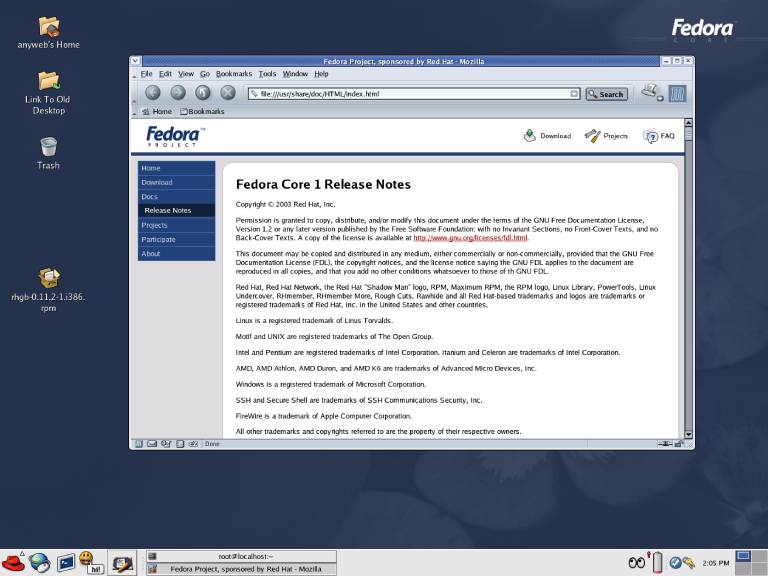

In November 2002, University of Hawaii undergraduate student Warren Togami began a volunteer, community-driven project called Fedora Linux, which provided some extra software for Red Hat’s extremely popular Red Hat Linux distribution. The name, Fedora, was derived from the hat used in Red Hat’s “Shadowman” logo. The goal of Fedora was to provide a single repository of vetted third-party software packages, making non-Red Hat software easier to find and improve. Unlike the development of Red Hat Linux, Fedora was intended to be run as a collaborative project within the Red Hat community, governed by a steering council of members from all around the world.

At the same time that Fedora was launched, Red Hat Linux was discontinued in favor of the company’s new offering, Red Hat Enterprise Linux (RHEL). Red Hat took interest in the Fedora Project early on and began supporting it as a community-run distribution for desktop users. RHEL became the only Linux distribution officially supported by Red Hat, though Fedora began to spread and soon became widely used throughout the company. As Fedora began to mature, Red Hat used it as a sort of “testing ground” or “incubator” for updated packages before they made their way into the stable and much slower moving RHEL.

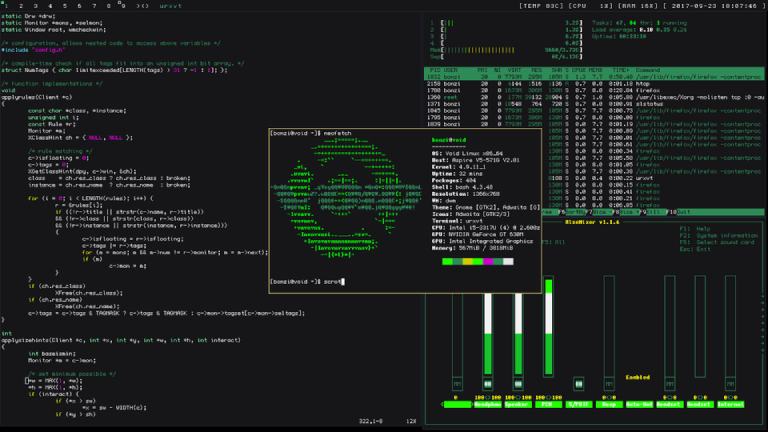

In 2003, the largest overhaul of the Linux kernel’s development process in its entire history would begin with version 2.6. The 2.6 series spanned nearly eight years, ending in 2011, and introduced a massive number of updates including 64-bit support, an improved scheduler for multi-core systems, increased modularity of components, and much more. As a consequence of the extremely long development period, the Linux kernel release cycle would transform into what it is today. Due to the massive architectural and tooling changes, Linux kernel history is split into two major periods of development: the releases up to and including 2.6, and the releases after it, beginning with version 3.0.

Also in 2003, Sun Microsystems would release details about its next generation 3D user interface, known as Project Looking Glass. The environment was originally built to replace the Java environment in Solaris. Looking Glass was an attempt to make a futuristic desktop environment that would be compatible with most Unix-like systems and even Microsoft Windows. However, the project never made it to fruition and the codebase was open-sourced for others to continue work on it. It would go on to greatly influence the look and feel of Apple’s Mac OS X, especially with the major user interface revamp that came with version 10.5 “Leopard”.

In November 2003, SuSE (renamed from S.u.S.E.) was acquired by Novell, a company that already had its hands in the commercial UNIX ecosystem with its NetWare and UnixWare operating systems. Earlier that year, the company had acquired Ximian, a company founded by GNOME developers, and SUSE Linux’s default desktop environment was switched to GNOME from KDE. The result was that the second iteration of GNOME would become extremely successful, with engineers from Red Hat and SUSE working together on the desktop environment and application ecosystem along with an extremely fast-growing community of talented developers from all around the world.

After many years of being the main BSD implementations in use, FreeBSD and NetBSD finally received some friendly competition in July 2004 with the release of a FreeBSD 4.8 fork by Matthew Dillon, which he named DragonFly BSD. Dillon was an Amiga developer from the late 1980s to the early 1990s and then joined the FreeBSD team in 1994. In 2003, Dillon had major disagreements with how the FreeBSD developers proposed implementing threading and symmetric multiprocessing and thought their techniques would cause massive maintenance problems as well as a significant performance hit.

So, when Dillon’s disagreements with the FreeBSD development team escalated, they revoked his privilege to contribute directly to the codebase, causing him to announce the start of his forked project, DragonFly BSD, on the FreeBSD mailing lists. Though Dillon remains friendly with the FreeBSD developers, DragonFly BSD went in a completely different direction with components like lightweight kernel threads, an in-kernel message passing system, and DragonFly BSD’s own file system known as HAMMER.

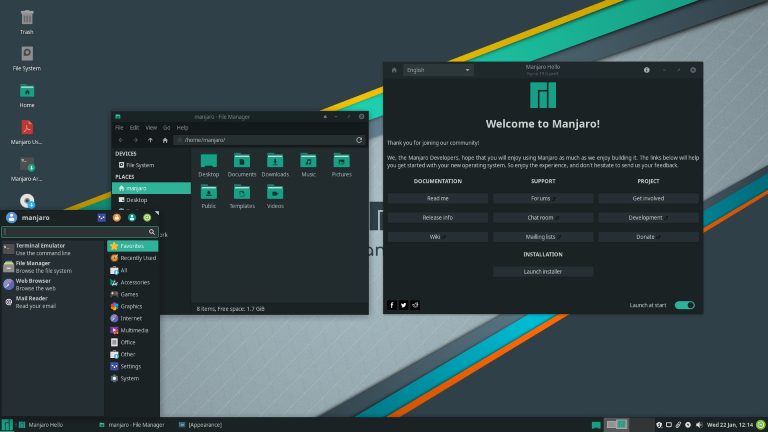

In late 2004, a new distribution would make its way into the Linux community and would completely alter the desktop Linux experience forever. It began with the sale of an internet security company, Thawte, to Verisign for an undisclosed price, making the South African founder, Mark Shuttleworth, a multi-millionaire overnight. Instead of retiring and living out a life of luxury, Shuttleworth decided to use his fortune to change the world.

Shuttleworth, a free and open source software enthusiast and Debian contributor, understood the importance of Linux as an open operating system, which had the potential to run the world. In April 2004, Shuttleworth brought together a group of some of the most talented Linux developers from all around the world to see if they could put together the ideal operating system based on Linux.

Because Shuttleworth was a huge fan of the Debian Project, the team chose to use Debian as a stable base to build upon. The ideas that these developers came up with for transforming Debian into an operating system that was easy to use and understand included this now-famous list:

- Predictable and frequent release cycles.

- A strong focus on localization and accessibility.

- A strong focus on ease of use and user-friendliness on the desktop.

- A strong focus on Python as the single programming language through which the entire system could be built and expanded.

- A community-driven approach that worked with existing free software projects and a method by which groups could give back as they went along, not just at the time of release.

- A new set of tools designed around the process of building distributions that allowed developers to work within an ecosystem of different projects and that allowed users to give back in whatever way they could.

Though these might seem like the bare minimum for popular Linux distributions today, at the time they were revolutionary. Even a distribution like Debian, which was considered one of the easier distributions to install and manage, was far out of reach for people who didn’t have a technical background or interest. Shuttleworth planned to change that.

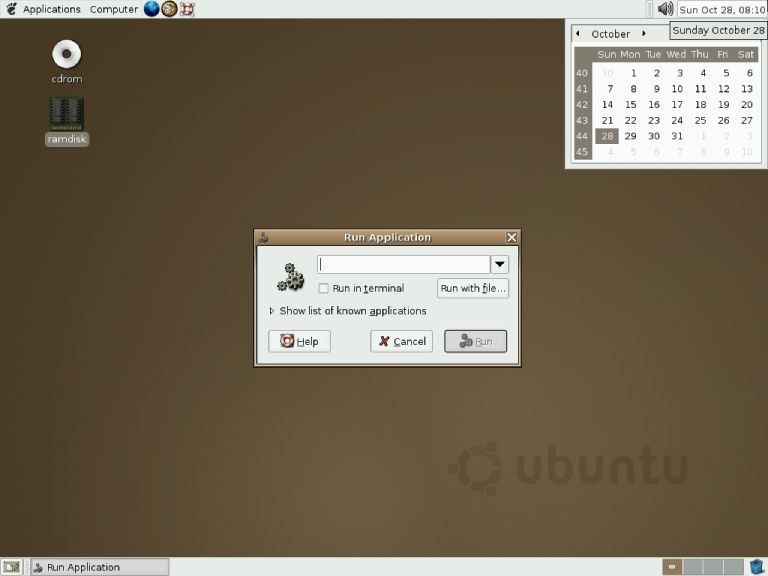

The group became known as the “Warthogs” and was given a six-month period, fully funded by Shuttleworth, to come up with a working prototype. In October of 2004, Shuttleworth and the Warthogs unveiled their project to the world as “Ubuntu”, a South African term that roughly translates to “humanity” in English, reflecting the best qualities of human beings like compassion, love, and community.

The first version, Ubuntu 4.10, was given the name “Warty Warthog”, as the team figured it would be riddled with bugs in the first release. Even without a single piece of promotion by Shuttleworth and the developers before its release, Ubuntu shot up the list of popularity in the Linux world almost overnight due to the ease of installation and use.

In the wake of Ubuntu’s massive success, Shuttleworth created a company, Canonical Ltd, in order to fund the project, and those first Warthog developers became the original members of the Ubuntu core team. Though Canonical decided on the GNOME 2 desktop environment as their default interface, another community project would port KDE to the Ubuntu base. This project, started by Canonical employee Jonathan Riddell, came to be named Kubuntu. Kubuntu would serve as a flagship offering of KDE for several years.

After seeing the success garnered by community-run projects like Fedora and Ubuntu/Kubuntu, SUSE decided to open up development by introducing the community-developed SUSE Linux distribution with its 10.0 release, which would eventually be renamed and rebranded as the openSUSE Project. Similar to Fedora, openSUSE became a community-run project with an elected steering council that oversaw the project’s direction. Though supported by SUSE, openSUSE was not considered an official product of the company.

One of the glaring differences between the Red Hat and SUSE approach to their desktop distributions and Canonical’s was that Red Hat and SUSE effectively cut themselves off from these projects, citing community as the source. On the other hand, though Ubuntu was also branded as a community project and took contributions from developers outside of Canonical, the company still had the absolute say over what direction the distribution took, which would eventually become a source of contention within the growing desktop Linux community.

In 2005, Sun Microsystems, the company behind Solaris, the most prolific commercial UNIX system left alive in industry, made a massive announcement. Though Solaris had been a proprietary and closed-source operating system since its replacement of SunOS in 1993, the company was beginning to see the benefits of the open-source model that Linux was utilizing and decided to release most of the codebase under the Common Development and Distribution License (CDDL) as OpenSolaris.

After seeing the massive progress for Linux on the desktop and feeling as though the open-source operating system was finally ready for prime time, two friends in Denver, CO decided to start a company dedicated to building hardware with Linux pre-installed. At the time, there was nobody (or very few, relatively unknown vendors) shipping personal computers with Linux, so most users had to install the operating system themselves, which contributed to poor adoption by the general public. After dismissing Red Hat, Fedora, openSUSE, Yoper and other popular distributions at the time, the company settled on Ubuntu due to its rapid growth, ease of use, and the nature of Canonical’s business model, which co-founders Carl Richell and Erik Fetzer appreciated.

The company became known as System76 as an allusion to the year 1776, when the United States declared its independence from Great Britain during the American Revolutionary War. The founders of System76 hoped that they could play a part in igniting another revolution, this time one of free and open-source software. They dreamed of a day when people could use their devices freely without the restrictions that apply to proprietary software, hardware, and firmware. The first System76 computers to ship ran Ubuntu 5.10 “Breezy Badger”.

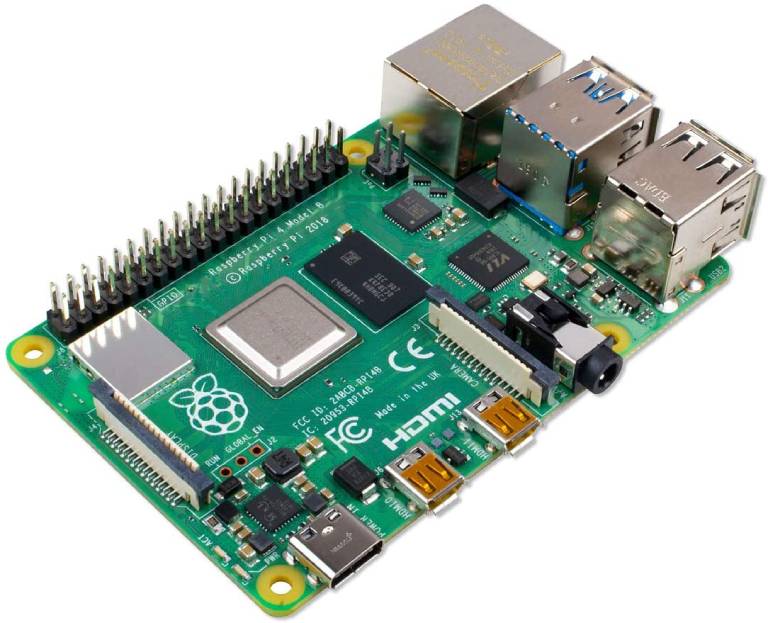

On October 24, 2005, Andrew Tanenbaum announced his next iteration of MINIX, version 3.0. Though massively overshadowed by the open-source operating system that it had inspired, MINIX 3 was designed not only as a research and learning operating system to accompany Tanenbaum’s textbook, Operating Systems: Design and Implementation, but also as a serious and usable system for the burgeoning embedded systems area, where high reliability was paramount. To that end, MINIX was designed to support smaller chipsets like i386 and the ARM architecture.

MINIX 3.1.5 brought in a massive number of Unix utility programs by supporting a wide range of software found on most Unix systems like X11, emacs, vi, cc, gcc, perl, python, ash, bash, zsh, ftp, ssh, telnet, pine, and several hundred more. The userland of version 3.2 was replaced mostly by that of NetBSD, making support for pkgsrc possible and increasing the number of software applications that could be used on MINIX. As the microkernel operating system gained more attention, Intel began using it internally as the software component making up the Intel Management Engine by 2015. MINIX 3 is still used in niche situations all over the world.

While Canonical continued to improve the user experience for desktop Linux enthusiasts, they found one area in particular to be nightmarish–the SysVinit init system, which was a collection of UNIX System V init programs from the 1980s that were ported to Linux by Miquel van Smoorenburg. Though there were other init systems available at the time, they hadn’t quite reached maturity on Linux and this was causing major problems for Ubuntu on a wide range of hardware.

To clean up the problem for Linux, Scott James Remnant, a Canonical employee at the time, began writing a new init system called Upstart, which was released to the world on August 24, 2006. One of the main draws of Upstart was that it was backward compatible with SysVinit: it could run unmodified SysVinit scripts, allowing for rapid integration into existing system architectures. Ubuntu was the first Linux distribution to adopt Upstart, including it as the default init system in Ubuntu 6.10 “Edgy Eft”. Many other popular distributions eventually followed suit, including Red Hat with RHEL 6 and Fedora, as well as SUSE’s enterprise solutions and openSUSE.

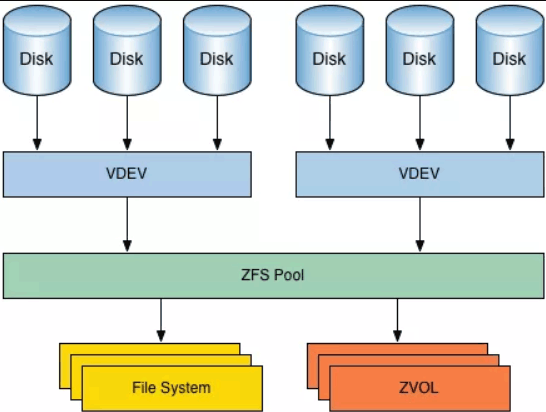

In 2006, another massive innovation would come out of Sun Microsystems. Back in 2001, Jeff Bonwick and his team at Sun had begun work on an area that hadn’t seen much creative innovation since Unix rose to maturity in the 1980s–the file system. Announced in September 2004, the Z File System (ZFS), originally named the “Zettabyte File System”, looked extremely promising and pushed the boundary for a “next generation” of file system quality. Many innovative and extremely useful features were built into ZFS, and it was even able to store approximately 256 quadrillion zettabytes of data (far more data than anyone had seen at the time).

In time, the major BSDs would pick up support for ZFS, with some even offering it as a default file system option. However, this would be done under the OpenZFS project, which encouraged open-source development based on the version of ZFS that had been released with OpenSolaris. Unfortunately, because ZFS was licensed under the CDDL, its code could not be merged into the Linux kernel, and attempts to integrate ZFS into Linux were largely ignored by the Linux kernel developers.

Though ZFS was found to be incompatible with the GPL v2 license used by the Linux kernel, another next-generation file system would soon become a reality, and this time it was GPL-licensed and built specifically for Linux. At USENIX 2007, SUSE developer Chris Mason would learn about an interesting data structure proposed by IBM researcher Ohad Rodeh, called the copy-on-write B-tree. Later that year, Mason joined Oracle and began working on a new file system that would use this data structure at its core. Mason named his project the B-tree file system (Btrfs).

At the time, the ext4 file system had come to dominate the Linux landscape. However, even ext4’s principal developer, Theodore Ts’o, stated that his own file system had gained little in the way of innovation and that Btrfs would be a much more interesting direction to explore. Btrfs included many of the popular features of ZFS, such as system snapshots, self-healing properties, and space savings from storing only the differences between snapshots, along with some features that weren’t present in ZFS. The growth of Btrfs was slow at first, but today it is used by major companies like Facebook and SUSE. There is even an initiative in the HPC community to use Btrfs as a backend for Lustre, the massively parallel file system that is the de facto standard on supercomputers today–an honor that has long been reserved specifically for ext4 or ZFS.

Though RHEL was available only through a paid subscription model, the project’s source code was available to the public. Because of this, a few RHEL clones would pop up to offer the same capabilities as RHEL without the need for payment, though they did not include the official support that Red Hat provided. Two of the major competing RHEL clones were Gregory Kurtzer’s CAOS Linux and David Parsley’s Tao Linux.

In 2006, the two distributions decided to come together to work on the common goal of providing a free version of RHEL and would eventually rebrand the distribution as The CentOS Project. By July 2010, CentOS had gained enough support from the developer community that it overtook Debian as the most popular distribution for web servers, though the lead wouldn’t last for long (Debian regained the title in January 2012). CentOS became an extremely popular distribution for developers because it allowed them to use an operating system with all the perks of RHEL without paying for support contracts, especially for smaller projects like web hosting that didn’t require much vendor support. The CentOS community stepped up and began helping those who chose the distribution through its own mailing lists, web forums, and chat rooms.

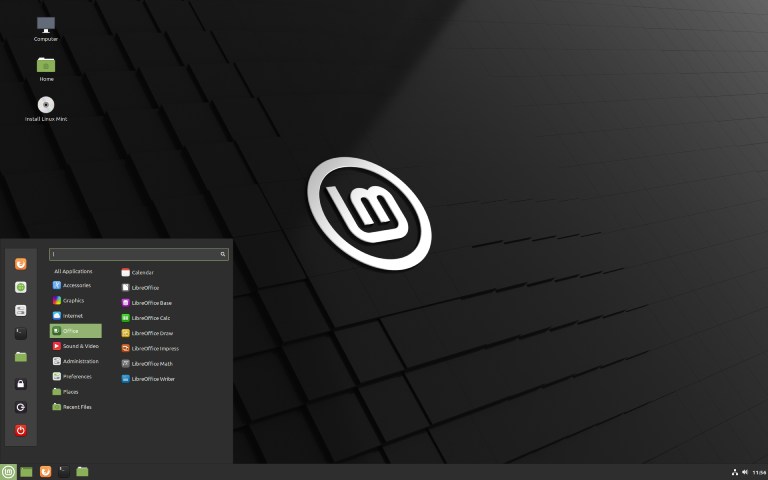

Like its parent distribution, Debian, Ubuntu started to become a major, stable base on which other software developers could build their own distributions. One of the first Ubuntu derivatives, Linux Mint, was created in August 2006 by Clément Lefebvre (known as “Clem”). The developers who started Mint weren’t too keen on some of the decisions that Canonical made and were also afraid that if the company went under, the advancements made in Ubuntu would be lost along with it. Though their very first beta-quality release, codenamed “Ada”, was based on Kubuntu, every release of Mint from version 2.0 on would use Ubuntu as its official base.

Part of the goal of Linux Mint was to create a better version of Ubuntu than Canonical’s own. Because they were not bogged down in many of the lower-level details, the Mint developers were able to build and package a number of extremely helpful tools of their own design. These tools were built with the singular goal of making Linux as easy to use as Microsoft Windows, even for users who were not technically inclined–a trend that would only grow with the rise of many Ubuntu-based distributions throughout the next 15 years.

However, not everyone was looking to build on Ubuntu, Debian, Red Hat, or Arch Linux at the time. Instead, some developers had other ideas for building their own distributions from the ground up. In 2008, a former NetBSD developer, Juan Romero Pardines, was looking for a system to test his new X Binary Package System (XBPS). He decided to experiment with a Linux distribution for this purpose, as well as to test other software from the BSD world for compatibility with Linux.

His creation, dubbed Void Linux, has since deviated from the norm in many respects, including using a smaller init system, runit, in place of SysVinit (and later, systemd), implementing LibreSSL in place of OpenSSL by default, and building install media that offer a choice of C standard library implementation between the GNU Project’s popular glibc and the newer, lesser-known musl libc. Though Void Linux started out slowly (in a similar vein to Arch Linux), it has grown impressively, especially in the last few years, in part due to its deviation from the norm as well as its adoption of the rolling release model.

By 2008, the X Window System (X11) was starting to show its age as Linux moved into the modern era. While traveling through Wayland, Massachusetts, Kristian Høgsberg, an X.Org developer and Red Hat employee, settled on a vision of what a modern display server would look like and began working on the Wayland display server protocol in his spare time at Red Hat. It would take some time before Wayland would become a major open-source project.

However, unlike the X.Org project, Wayland was simply a display protocol that did not include a compositor. This meant that desktop environments would have to implement compatibility with Wayland themselves. An experimental implementation of a Wayland compositor by the Wayland developers, called Weston, was released, but was more of a proof of concept as well as a reference for others attempting to build Wayland compositors. For that reason, Weston is still not recommended for use on production systems.

A few years later, Canonical would announce their own display server, named Mir, for their Unity8 desktop environment. The two projects diverged significantly over the years, and eventually Wayland was chosen by the vast majority of Linux ecosystem developers as the de facto display server protocol to replace X11. However, with all the work put into Mir, Canonical didn’t want to scrap the project outright. Instead, Mir was re-implemented as a Wayland compositor that is still in active development today.

At the same time that Wayland was moving from idea to implementation, another major event would push Linux into a breakthrough arena that would come to dominate human life–the smartphone. Android Inc. was founded in the heart of Silicon Valley in 2003 with the idea of creating a smarter operating system that could be “more aware of its owner’s location and preferences”. Early on, the startup focused on digital cameras before realizing that a significant market wasn’t there. A year later, they had pivoted to a different idea, an operating system for mobile phones–one that was being explored by other major tech companies like Microsoft and Apple.

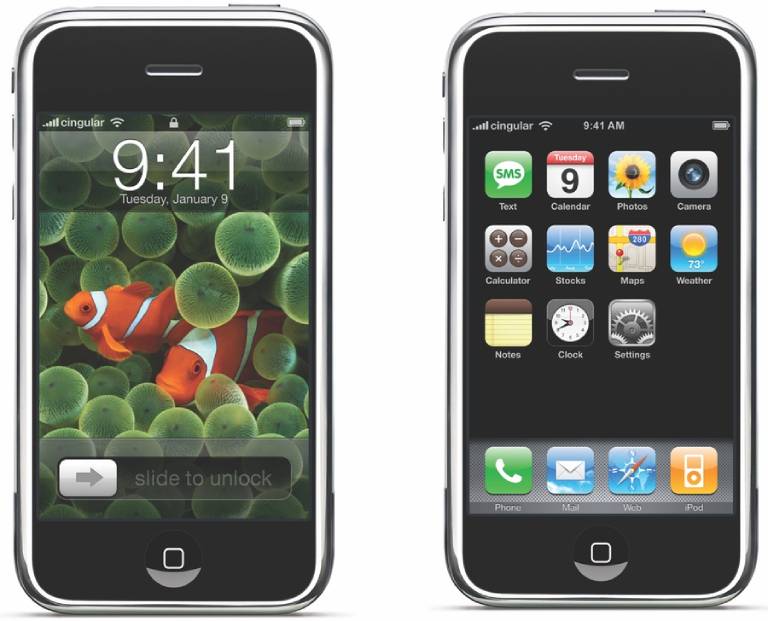

In 2005, Android was acquired by Google, which brought a lot of attention to the little-known company. Their first prototype of a mobile device resembled a BlackBerry phone, with an operating system built upon the Linux kernel. However, when Apple released their iPhone in 2007, it was clear that Android would once again have to pivot if they wanted to compete with iPhone OS (now iOS), Apple’s mobile operating system built on top of their BSD-flavored Darwin kernel (the same kernel as Mac OS X at the time).

After a year of extremely hard work, the Android developers released their mobile operating system of the same name to the public on September 23, 2008. The project became an immediate success and was able to penetrate the mobile market due to its much lower cost than Apple’s devices as well as a much more configurable interface. By 2013, Android was the most popular mobile operating system in the world, and that growth wouldn’t stop there. As of today, Android is utilized by over 2 billion people worldwide, making it the most popular operating system in existence.

In 2010, one of the largest acquisitions in American technology history took place when Oracle Corporation bought Sun Microsystems. Oracle, a company known for its proprietary database products, wasn’t fond of open-source software, as they did not see the economic incentive behind it. So, as Oracle took ownership of Sun’s IP, they began shutting the doors on the major open-source projects that Sun had backed, like OpenSolaris and ZFS. This pushed many developers to carry on with the last available open versions of the operating system and the next-generation file system.

This move resulted in the founding of the illumos project and, subsequently, the non-profit Illumos Foundation. Announced in August 2010 by some core Solaris engineers, the illumos project’s mission was to pick up where OpenSolaris had left off by providing open-source implementations of the remaining closed-source code that was part of OpenSolaris. The illumos developers quickly moved to fill in the missing pieces of code, such as the closed portions of libc, and to use the GNU Project’s GCC in place of the OpenSolaris compiler collection, Studio.

The best-known free and open-source variant of OpenSolaris was developed by the Illumos Foundation and released in September 2010. It was named OpenIndiana in reference to Project Indiana, the initiative that Sun Microsystems had started to build a binary distribution around the OpenSolaris codebase. Project Indiana was originally led by Ian Murdock, the founder of the Debian Linux distribution.

In 2010, it seemed that Linux was on top of the world: it was absolutely dominating the mobile space, Ubuntu was becoming about as close to “mainstream” as desktop Linux ever had, and all sorts of new innovation was happening around the operating system and the extended Unix-like ecosystem that it depended on. However, a massive rift was about to be felt throughout the entire desktop Linux enthusiast community, one that nobody could have foreseen.

In 2010, the GNOME developers announced the release of the next iteration of their extremely popular desktop environment. Their existing GNOME 2 desktop was so universally accepted as the default desktop environment at the time that Red Hat, SUSE, and Canonical were all united behind its development. However, when the design plans and beta tests of GNOME 3 started making the rounds, people lost their minds.

While GNOME 2 followed the traditional desktop paradigm laid out by Windows 95 fifteen years prior, GNOME 3 was a complete redesign from top to bottom. Upon boot, a single bar at the top of the screen showed an “Activities” button on the left, the date and time in the center, and a single drop-down menu on the right that included all the information usually found in a system tray. The rest of the screen was filled by a wallpaper with no folders or icons on it. Many people were upset that the GNOME developers had stripped out most of the customization that was available in GNOME 2.

Besides the drastic stylistic departure, the first iterations of GNOME 3 were extremely buggy, slow, and resource heavy. Many long-time Linux users, including Linus Torvalds himself, moved to KDE or other desktop environments instead. Canonical, after having disagreements with the GNOME developers, decided to ditch the interface they had used for the past six years and began working on their Unity shell for Ubuntu’s future. Unity was built on top of GNOME technologies as an alternative to the GNOME Shell and wasn’t exactly perfect on its trial runs either.

However, unlike GNOME 3, Unity made progress and became quite an enjoyable interface by the time Ubuntu 12.04 came around. GNOME 3 still struggled with its problems and, with many users and distributions leaving its ecosystem, it became more difficult to pull in talented developers.

In fact, a group of GNOME 2 fans and developers gathered together under the leadership of an Argentinian developer in August 2011 to fork their favorite desktop environment so that it could live on past its end of life. The new fork of GNOME 2 became known as MATE, a direct reference to the South American plant, yerba mate, which is used in an extremely popular drink. However, instead of going into maintenance mode, the MATE developers began porting the desktop environment to support the newer GTK 3 toolkit so that they could continue to receive the latest applications.

Besides the rise of MATE, another developer community was very unhappy with the direction that GNOME was headed. Linux Mint began actively developing a set of GNOME Shell extensions that made the desktop look and work in a more traditional manner. Eventually those extensions blossomed into a completely separate GTK 3-based desktop environment that the Mint team named Cinnamon. Both MATE and Cinnamon have gone on to become full-fledged and popular desktop environments in the greater Linux community (and beyond in some cases).