Hello and welcome to the twenty-third edition of Linux++, a weekly dive into the major topics, events, and headlines throughout the Linux world. This issue covers the time period starting Tuesday, August 11, 2020 and ending Thursday, September 10, 2020.

This is not meant to be a deep dive into the different topics, but more like a curated selection of what I find most interesting each week with links provided to delve into the material as much as your heart desires.

If you missed the last report, Issue 22 from August 10, 2020, you can find it here. You can also find all of the issues posted on the official Linux++ Twitter account here or follow the Linux++ publication on the Destination Linux Network’s Front Page Linux platform here.

In addition, there is a Telegram group dedicated to the readers and anyone else interested in discussion about the newest updates in the GNU/Linux world available to join here.

For those that would like to get in contact with me regarding news, interview opportunities, or just to say hello, you can send an email to linuxplusplus@protonmail.com. I would definitely love to chat!

There is a lot to cover so let’s dive right in!

Community News

Linux Kernel 5.8 Released

If you were to ask any knowledgeable Linux enthusiast what the most impactful kernel version was, many would reply with favorites like 2.6 or 4.2 (and they could be right in their own way!). Even though both of those releases were massive (with 2.6 taking several years to complete), there is a new largest kernel release in town…and it’s known as version 5.8.

With a much shorter release cycle for kernel development today compared to years past, it was definitely surprising to hear that the latest version of our favorite open-source operating system was the largest in its history! Even with all the hype, Linux kernel 5.8 isn’t necessarily the most groundbreaking release ever, but it sure adds a lot of nice features, especially if you’re running newer hardware or are looking at upgrading sometime in the near future.

One of the headline features of 5.8 is the work that has been done on behavior during “memory thrashing” encounters. Memory thrashing happens when the resources allotted to virtual memory are overused, which results in a constant state of paging and page faults where the system spends more time moving pages in and out of memory than doing useful work. Consequently, applications in the user space area of the OS become unusable (or “frozen”).

In response, the kernel team decided to take a hard look at Linux’s IO model and was able to implement a more aggressive algorithm to force the kernel to scan anonymous memory during a thrashing episode. In addition, the LRU balance and IO cost between the swap device and file system were altered slightly, effectively optimizing reclaim for the lowest IO cost possible. In English? You should see noticeably better performance in situations with high memory pressure (like all those browser tabs you have open! ;)).
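To make the idea of thrashing a bit more concrete, here is a minimal toy sketch in Python. It is not the kernel's reclaim algorithm, just a plain least-recently-used (LRU) simulation with made-up names (count_page_faults, cyclic_accesses), showing how a working set that is only one page larger than available memory can turn nearly every access into a page fault.

```python
from collections import OrderedDict


def count_page_faults(accesses, num_frames):
    """Count page faults for an access sequence under a simple LRU policy.

    Toy model only: real kernels use far more elaborate reclaim heuristics.
    """
    frames = OrderedDict()  # resident pages, least recently used first
    faults = 0
    for page in accesses:
        if page in frames:
            frames.move_to_end(page)  # hit: mark as most recently used
        else:
            faults += 1  # miss: the page has to be brought in
            if len(frames) >= num_frames:
                frames.popitem(last=False)  # evict the least recently used page
            frames[page] = None
    return faults


def cyclic_accesses(working_set, rounds):
    """Touch pages 0..working_set-1 in order, repeated `rounds` times."""
    return [page for _ in range(rounds) for page in range(working_set)]


# Working set fits in memory: only the first touch of each page faults.
fits = count_page_faults(cyclic_accesses(4, 100), num_frames=4)
# Working set is one page too large: every single access faults.
thrashes = count_page_faults(cyclic_accesses(5, 100), num_frames=4)
print(fits, thrashes)  # prints: 4 500
```

In this toy model, going from four pages to five takes the fault count from 4 to 500 for the same amount of work, which is roughly why a thrashing system feels frozen.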

Another major inclusion into kernel 5.8 is that of the Kernel Concurrency Sanitizer (KCSAN). From the official website:

“The Kernel Concurrency Sanitizer (KCSAN) is a dynamic race detector, which relies on compile-time instrumentation, and uses a watchpoint-based sampling approach to detect data races. KCSAN is supported by both GCC and CLANG. With GCC we require version 11 or later, and with Clang also require version 11 or later.”

This is a very interesting and welcome addition to the kernel in my opinion. For those unaware, data races are among the nastiest bugs that affect modern software. A data race occurs in highly concurrent systems when two different threads access the same memory location at the same time, at least one of them is writing to it, and nothing synchronizes the accesses.

Consequently, some very strange, undefined behavior, or even full-blown memory corruption, can occur. Data races have been one of the most pressing issues faced by C programmers working on concurrent systems (like the Linux kernel), so you can never have enough safeguards in place to catch them before they cause damage.
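For readers who have never run into one, here is a small, hedged illustration of the same class of bug in user-space Python. It has nothing to do with KCSAN or kernel code, and the function name run_counter is made up for this example. Several threads increment a shared counter; without a lock, the read-modify-write in counter += 1 is not guaranteed to be atomic, so updates can be lost on some runs, while the locked version always arrives at the expected total.

```python
import threading


def run_counter(num_threads, increments, use_lock):
    """Increment a shared counter from several threads and return the result."""
    counter = 0
    lock = threading.Lock()

    def worker():
        nonlocal counter
        for _ in range(increments):
            if use_lock:
                with lock:  # only one thread at a time may update the counter
                    counter += 1
            else:
                counter += 1  # unsynchronized read-modify-write: updates may be lost

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return counter


expected = 4 * 10_000
print("with lock:   ", run_counter(4, 10_000, use_lock=True), "expected", expected)
print("without lock:", run_counter(4, 10_000, use_lock=False), "expected", expected)
```

Whether the unlocked run actually loses updates depends on the interpreter version and on timing, which is exactly what makes these bugs so hard to reproduce and why an automated detector is so welcome.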

Though these two additions stand out in kernel 5.8, there are plenty of other goodies packed into the massive release including a new kernel event notification mechanism built on pipes, private procfs instances, the ability to use pidfds to attach namespaces, improved security for the ARM64 architecture, support for Inline Encryption hardware in the kernel’s block layer, support for Multi-Protocol Label Switching to IPv6, and many Btrfs performance and reliability improvements.

In addition, as with all new kernel releases, 5.8 introduces support for a number of newer hardware interfaces including a new AMD energy driver for Zen and Zen2, improved support for AMD Renoir CPUs and ACP drivers, trusted memory zone support for AMDGPU, improvements to the exFAT driver, Thunderbolt ARM support, and new drivers for the Intel Atom camera.

All in all, I’d say that this was definitely a very productive and impressive kernel release, even though most are already looking ahead to version 5.9 (as always ;)). I want to give a huge shout out to all the people working hard to improve the Linux kernel every day. It’s not easy work and we salute you for everything you do!

There are a ton of additional changes in kernel 5.8, so make sure to check out this great rundown, aimed at those newer to kernel development, here.

Unfortunate News Out of Mozilla

Some astonishing news came out of Mozilla this past month regarding the future of the company, and many are upset with the direction. An official announcement blog post, titled “Changing World, Changing Mozilla”, from Mozilla CEO Mitchell Baker was released to the public, stating that Mozilla would be laying off nearly 250 employees in the coming days. This is a massive hit considering that it equates to nearly 25% of the entire company.

Though the extremely sad post didn’t specify which teams were being reduced or completely eviscerated, many of the laid-off employees have resorted to looking for new opportunities through Twitter, using the hashtag #mozillalifeboat. Those who have been paying attention to which employees were laid off have discerned that many important teams have seen cuts, including Firefox devtools, Firefox incident/threat management, Servo, MDN, WebXR/Firefox Reality, and DevRel/Community.

Looking at the above teams, it is easy to see that our favorite privacy and security respecting, open-source browser, Firefox, might be in major trouble, especially with Google doubling down on its Chrome team and Microsoft Edge being shoved down every Windows user’s throat. Firefox is one of the last major bastions of the open web and its removal would certainly mean that access to the Internet will be heavily controlled by Google and Microsoft going forward.

Firefox is different from most popular browsers on the market in that it uses its own browser engine, Gecko, which in recent years has absorbed components from Mozilla’s experimental parallel, high-performance engine, known affectionately as Servo. This is in comparison to Blink, the engine of Google’s open-source Chromium project, which makes up the backbone of a large majority of browsers, including Chrome and Edge.

I just want to give a huge shout out to all those who helped make Mozilla the company that it is (or was?). Your work on Firefox and open-source projects has been instrumental for the Linux community and beyond, and for that I am extremely grateful. Though layoffs are obviously tough, I know that many of you will land on your feet, as your incredible skills at Mozilla have not gone unnoticed by the rest of the world.

If you are currently searching for work, please check out the aforementioned Twitter hashtag (#mozillalifeboat) that includes companies specifically looking to pick up the talented Mozilla employees affected by this. Thank you for all your hard work and dedication and I wish nothing but the best for you in your future endeavors.

If you would like to read the official blog post by Mozilla, you can find it here.

The Next PinePhone Has Been Revealed: Manjaro!

The makers of Linux-first hardware, PINE64, have certainly been busy over the last month. From working on elementary OS 6 support to new products in the Pinecil and PineCube, everyone’s favorite ARM-based device company doesn’t appear to be slowing down any time soon (just check out their August 2020 update to see!).

However, they have finally revealed the next in line for their very special community edition PinePhones, Manjaro, which joins the successful launches of the previous UBports Ubuntu Touch edition and the “flagship” postmarketOS edition. Certainly, I think most enthusiasts are aware that both UBports and the postmarketOS team have been working with the PinePhone team since the very early days of the project. However, many might not realize that the relationship between PINE64 and the Manjaro team is nearly as long-lived. There is no doubt that the team behind the extremely popular Arch-based distribution has spent an enormous amount of time making sure the different versions of their operating system work flawlessly on PINE64’s products.

It was just a few months ago that PINE64 announced that Manjaro’s KDE Plasma ARM edition desktop would become the default operating system on their popular ARM-based laptop, the PineBook Pro. Not only does this prove the strong relationship between the two developer camps, but Manjaro is one of the few operating systems with images for nearly all of PINE64’s SBCs and devices. With all the amazing work that the Manjaro team has accomplished, it comes as no surprise that they would eventually get their community edition PinePhone out there in the hands of Linux mobile enthusiasts.

There are currently several different build variants of Manjaro for the PinePhone including support for KDE’s Plasma Mobile, UBports’ Lomiri (once known as Unity8), and Purism’s Phosh (based on GNOME 3). Though it is unclear at this time which one of these environments will ship with the device, or if there will be a choice during the ordering process, you can rest assured that each of these environments is well-tested and among the smoothest experiences out there for the PinePhone.

As with previous versions of the community edition PinePhones, the Manjaro edition starts at 149 USD including 2 GB RAM and 16 GB eMMC, with the option of the “convergence” package (first offered with the postmarketOS build) at 199 USD including 3 GB RAM, 32 GB eMMC, and a special USB-C dock equipped with 10/100 Ethernet, 2 USB Type-A ports, HDMI digital video output and power-in via USB-C.

Of course, as with all of the community edition PinePhones, PINE64 promises to donate $10 to the development team (Manjaro) for each unit sold. So, if you would like to support the work that both PINE64 and the Manjaro team are doing, while getting an awesome device, this is a great way to show your appreciation. Moreover, it appears that the Manjaro artwork team will be designing a custom presentation box and the phone itself will feature “sleek-looking Manjaro branding on the back-cover.”

Now, if you are rushing to the PinePhone website to get yourself a Manjaro community edition, I’m sorry to tell you that you’ll have to wait just a few weeks before pre-orders open, which is scheduled for mid-September.

I want to personally thank the awesome people at PINE64 along with the hard-working team behind Manjaro for working together and putting out some seriously incredible work. The partnership between the two companies has resulted in a plethora of great work, and I for one cannot wait to see what other surprises they have up their sleeves in the future!

If you would like to read the official announcement you can find it on the PINE64 blog or the Manjaro forum.

Birth of the Open Source Security Initiative

The Linux Foundation has been an instrumental organization for open-source software all around the world, including paying the man with the plan, Linus Torvalds, to work full time on the development of our favorite operating system kernel. However, the Foundation isn’t really about promoting open-source software. It’s about building partnerships with the top enterprise organizations that rely on the stability of Linux and other open-source projects to operate, which includes securing funding from those organizations.

For instance, when you look at the list of companies that are members of The Linux Foundation, you will find a Who’s Who of the top tech corporations— Google, Facebook, Microsoft, Intel, Huawei, Oracle, IBM, AT&T, Qualcomm, Samsung, AMD, VMware, GitHub, GitLab, Panasonic, Fujitsu, the Linux-centric companies like Red Hat, SUSE, and Canonical, of course, and a whole lot more. The reason? Linux is vital to their business and infrastructure in more ways than one and it is in their best interest to make sure the project is as good as it can possibly be. The Linux Foundation is the organization that allows that to happen.

Earlier this month, a new initiative was announced by The Linux Foundation, one that deals with an extremely important subject affecting any kind of software: security. From the official announcement:

“The OpenSSF [Open Source Security Foundation] is a cross-industry collaboration that brings together leaders to improve the security of open source software (OSS) by building broader community with targeted initiatives and best practices…

Open source software has become pervasive in data centers, consumer devices and services, representing its value among technologists and businesses alike. Because of its development process, open source that ultimately reaches end users has a chain of contributors and dependencies. It is important that those responsible for their user or organization’s security are able to understand and verify the security of this dependency chain.”

Listen, in the 1980s, the GNU Project started the free software movement. Back then, the cybersecurity landscape was nothing like it is today (thanks, Internet!). When free or open-source software was first being built, most of it was written from scratch to replace components of proprietary systems, like the GNU C Compiler (gcc) replacing the original UNIX C compiler (cc).

Today, you would be hard pressed to find a software project or product that doesn’t contain or depend on open-source software somewhere down the stack. Many of the most basic system components that allow the creation of applications on top of applications are built with open-source software, and the deeper down the stack that an exploit is found, the more projects it will inevitably affect.
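As a rough sketch of why depth in the stack matters, the following Python snippet walks a small, entirely hypothetical dependency graph (the project and library names are invented for illustration) and collects everything that depends, directly or transitively, on a vulnerable component.

```python
from collections import deque

# Hypothetical graph: each project maps to the libraries it depends on directly.
DEPENDS_ON = {
    "web-shop": ["http-server", "json-lib"],
    "chat-app": ["http-server"],
    "http-server": ["tls-lib"],
    "backup-tool": ["tls-lib", "compress-lib"],
    "json-lib": [],
    "tls-lib": [],
    "compress-lib": [],
}


def affected_by(vulnerable, depends_on):
    """Return every project that depends, directly or transitively, on `vulnerable`."""
    # Invert the graph so we can walk from a library up to its dependents.
    dependents = {name: [] for name in depends_on}
    for project, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, []).append(project)

    affected = set()
    queue = deque([vulnerable])
    while queue:  # breadth-first walk up the dependency chain
        current = queue.popleft()
        for parent in dependents.get(current, []):
            if parent not in affected:
                affected.add(parent)
                queue.append(parent)
    return affected


print(sorted(affected_by("tls-lib", DEPENDS_ON)))
# prints: ['backup-tool', 'chat-app', 'http-server', 'web-shop']
print(sorted(affected_by("json-lib", DEPENDS_ON)))
# prints: ['web-shop']
```

In this made-up graph, a flaw in the low-level TLS library reaches four projects, while a flaw in the JSON library reaches only one. Real ecosystems have vastly larger graphs, which is what makes verifying the whole chain so difficult.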

We’ve seen the destruction that can be caused by a flaw in an extremely popular open-source technology, one of the bigger and better maintained projects, with 2014’s Heartbleed affecting any project using a vulnerable version of OpenSSL. This should definitely cause concern in the open-source community. From the new OpenSSF homepage:

“The initial technical initiatives will focus on:

- Vulnerability Disclosures

- Security Tooling

- Security Best Practices

- Identifying Security Threats to Open Source Projects

- Securing Critical Projects

- Developer Identity Verification”

Because open-source projects are, for the most part, created and built in volunteers’ free time, there just can’t be the attention to detail that would happen in a major project that had an entire security team paid to consistently look for vulnerabilities. Sometimes, an open-source project can absolutely explode, scaling up to millions of users in a short period of time. Just look at technologies like Kubernetes, TensorFlow, or even a language like Kotlin, and you can see how a project goes from a few hundred users to literally millions and millions of users in a few years’ time. The ecosystems around these projects are massive now, and they all rely on the base system for their deepest security.

Unfortunately, there are major issues with The Linux Foundation, namely that it really isn’t an organization dedicated to promoting open-source software or even Linux itself to new or prospective users. The ultimate goal of The Linux Foundation is to garner funding from large companies for continued work on open-source projects and, therefore, those projects become influenced by corporate agendas. That means, they focus heavily on work for Linux as a server system, a base for Android, and other specific corporate interests. Obviously, this has caused a major area of contention within the open-source community.

No matter your view on The Linux Foundation itself, this is a move that has been needed for a long time in the open-source community. Of course, the argument can be made that open-source projects have better security overall because of the ability for anyone to audit and fix the source code, but this only works for major projects where many thousands of people are actively engaged. However, many of the essential lower-level system components don’t have the same mass appeal to new developers that projects like Node.js, Kubernetes, or TensorFlow do.

Take, for instance, the Perl programming language. It is an essential tool used in basically any Linux build (in fact, it’s one of the first pieces of software installed on a Unix-like system). Perl was one of the most popular languages in existence from the late 1980s through the early 2000s, and it was instrumental on Unix-like systems as well as being the tool that bootstrapped the early Internet. Today, however, Perl is falling out of favor with newer developers due to the rise of other languages like Python and Ruby that are considered more developer friendly. Every Linux distribution and many Unix-like tools rely heavily on Perl, yet with a dwindling number of developers actually looking at the core project, it becomes increasingly open to potential vulnerabilities.

Having a specific organization that is paid to investigate and audit these critical open-source projects will only make them more secure and reduce the chance of something like Heartbleed happening once again. I’m happy that this is at least being discussed and some action is being taken to address these critical issues that have the potential to negatively affect billions of users around the world today.

It will certainly be interesting to see exactly what actions the OpenSSF will take and what projects they will prioritize in the massive open-source ecosystem. I only hope that this initiative will do what it is meant to, ensuring better security across the major open-source projects that millions of users depend on, instead of simply funneling money toward critical infrastructure chosen by the tech megaliths of our day.

If you would like to read the official announcement from The Linux Foundation, you can find it here. If you would like to learn more about OpenSSF, you can find their official website here.

iXsystems: Updated Brand & Some Cool New Hardware

When many Linux enthusiasts or IT hobbyists think of creating a personal storage solution with a Network Attached Storage (NAS) system, the first that inevitably comes to mind is the highly-popular FreeNAS. FreeNAS is a free and open-source NAS operating system that is based upon FreeBSD and uses the OpenZFS file system — one of the top tier and most popular file systems in the world today when it comes to making sure your data is safe.

Though the FreeNAS project was originally created by Olivier Cochard-Labbé in 2005, today it is openly developed by iXsystems, a company specializing in FreeNAS and accompanying hardware that ranges from a few hundred dollars for a high-quality personal NAS to tens of thousands for complete enterprise-grade servers. But, FreeNAS is more than an operating system backed by a company — it is the most popular option for those building “homelabs” all across the world.

In iXsystems’ model, they have different tiers of products, with FreeNAS being the free and open-source product. However, they also sold products that included support, which used the TrueNAS brand and were built by some of the very talented developers of the once-popular desktop-focused BSD distribution, TrueOS.

After many years of this naming scheme, it appears that iXsystems recently decided to streamline their product portfolio with what they refer to as the TrueNAS Open Storage model. The first move made was changing FreeNAS’ name to TrueNAS CORE. Though some might complain, this is merely a name change and nothing more. TrueNAS CORE, starting with the 12.0 release, will continue to provide the same free and open-source operating system that FreeNAS was.

Consequently, iXsystems are building two different products with TrueNAS CORE…well…at their core. The second offering is for enterprise customers and is aptly named TrueNAS Enterprise, which is based on the previous TrueNAS edition supplied by iXsystems. From the official TrueNAS website, the enterprise edition will include everything in TrueNAS CORE along with:

- Dual Node Edition

- Unified Storage

- High Availability

- Plugins & VMs

- Professional support

Sounds pretty normal for an open-source company right? Well, they have actually gone a bit further to allow TrueNAS to work in large storage environments like data centers. This addition is aptly named TrueNAS Scale, and includes everything in the TrueNAS Enterprise edition, along with:

- Multi-Node Edition

- Scale-out Storage

- Containers and VMs

- Based on Linux instead of FreeBSD

Now, while CORE and Enterprise are currently available, TrueNAS Scale is still in heavy development at the time of this writing, but it will definitely be interesting to see if it has the ability to break into the large-scale storage sector, which can be quite competitive.

On top of this entire reworking of the company’s products, iXsystems has recently put out the first version of their FreeNAS 11.3 replacement, TrueNAS CORE 12.0, for beta testing. The improvements made over FreeNAS 11.3 have boosted performance significantly along with the inclusion of OpenZFS 2.0. From the iXsystems official blog post:

“TrueNAS 12.0 BETA2 is now available for testing with almost no functional changes, but is up to 30% faster for many use cases!…

For the first time, TrueNAS demonstrated over 1 million IOPS and over 15 GB/s on a single node! We’ll share more about that system and its configuration soon. This release has been stress tested in both TrueNAS CORE and Enterprise forms on all the X-Series (X10 and X20) and M-Series (M40 and M50) platforms.”

Much of this speed up comes down to a ton of new functionality in OpenZFS 2.0 and includes Non-Uniform Memory Access (NUMA), file system metadata stored on flash, fusion pools, persistent L2ARC, asynchronous Data Management Units (DMU) and Copy-on-Write (CoW), increase in maximum record size, checksum vectorization, asynchronous TRIM, faster boot times, deduplication improvements via using flash instead of HDDs, and faster iSCSI reads.

Wow, that’s a mouthful! But wait, that’s not it you say? Even with the impressive results given by iXsystems, there is even more news coming out of the open storage company — new hardware!

Indeed, it looks like iXsystems is updating their product line, and the first new piece of hardware was announced recently as the fastest OpenZFS storage system in existence. The TrueNAS M60 is a wonderful piece of gear targeted at enterprise customers that offers over 20 GB/s and 1 million IOPS, as well as options for a 20 petabyte hybrid solution or 4 petabytes of all-flash capacity, 1.5 TB of RAM, 64 CPU cores, 128 GB of NVDIMM fast write cache, and eight 100 GbE ports with a 12.8 TB NVMe flash tier. Now that’s what I call some serious storage!

However, iXsystems knows their audience and wasn’t about to not throw a bone to the personal storage enthusiasts out there. Consequently, their latest announcement is regarding their popular TrueNAS Mini line with the reveal of the Mini X and Mini X+. From their official blog post:

“The TrueNAS Mini X+ includes 2x RJ45 10 GbE ports, 8 cores, between 32–64GB of DDR4 ECC memory, USB 3.1 connectivity, and 1x RJ45 IPMI remote management interface. With high bandwidth networking and optional flash capacity, this system is capable of supporting video editing, data backup, and file sharing applications with up to 2GB/s of bandwidth at less than 100W per unit, fully-loaded with drives.

The TrueNAS Mini X includes 4 x RJ45 GbE ports, 4 cores, between 16–32GB of DDR4 ECC memory, USB 3.1 connectivity, and 1 x RJ45 IPMI remote management interface. The system uses less than 80W of power.”

The TrueNAS Mini X starts at $699, but obviously can be upgraded to the customer’s content (within physical capabilities). Compared to some other complete NAS solutions out there, you will find that you get what you pay for. There are definitely cheaper options out there (and, obviously, building your own is the cheapest). However, if you are serious about your data storage needs and want a quality piece of hardware that was born to run TrueNAS and is made by an awesome company, this is definitely an option you should look at.

I want to congratulate iXsystems for all of these exciting announcements and I can’t wait to give TrueNAS CORE 12.0 a try when it comes out of beta. It looks like iXsystems is really focusing on their prime market and continues to release high-quality free and open-source software while doing so. It will be interesting to see where the company ends up standing compared to competitors as some time passes. Good luck, iXsystems, I know I’ll be keeping my eye on your blog for quite a while!

If you would like to check out iXsystems, you can find them on Twitter or their official website. In addition, if you would like to check out the new TrueNAS website (including information about the aforementioned hardware), you can find it here along with the download page to try out TrueNAS CORE 12.0 beta here. To keep up to date with information as it rolls out, follow their official blog here.

Community Voice: Sean Rhodes

This week Linux++ is very excited to welcome Sean Rhodes, Technical Project Lead at Star Labs Systems. Star Labs is a newer Linux hardware company that was founded in a pub in 2016 by three friends who wanted a better computing experience for their hardware on Linux.

Today, Star Labs has grown to offer two major laptop products: the Star LapTop Mk IV and the Star Lite Mk III. Both machines were designed from the ground up instead of rebranding different chassis like those from Clevo. The company also offers one of the largest varieties of pre-installed Linux distributions in the hardware world, including Ubuntu, Linux Mint, Zorin OS, Manjaro, elementary OS, and MX Linux. So, without further ado, I’m happy to present my interview with Sean:

How would you describe Linux to someone who is unfamiliar with it, but interested?

“A regular question that gets asked when I say I work for a company that makes Linux laptops! I say, ‘it’s an alternative to Windows and macOS’. There are so many aspects of Linux that can overwhelm someone who isn’t familiar with it, be it the 330+ distributions, the fact that it’s open-source, or that it has a terminal.”

What got you hooked on the Linux operating system and why do you continue to use it?

“The first time I ever installed Linux was due to curiosity. I was hooked on the amount of choice available, and now, it’s both for professional and personal use that I still use it.”

What do you like to use Linux for? (Gaming, Development, Casual Use, Tinkering/Testing, etc.)

“All of the above, really! I can’t remember the last time I used Windows or macOS.”

Do you have any preferences towards desktop environments and distributions? Do you stick to one or try out multiple different kinds?

“This question comes up here a lot (surprisingly) and my favourite has always been [Linux] Mint. I’m the only one here whose favourite is Mint, so I get asked, why?

I have the strangest analogy; I’ve always thought of Mint like a 15-year-old Labrador. It’s not particularly fast, it’s not the best looking, and teaching it new tricks is a lot of work. But…it’s dependable, faithful to what it is, easy, and you just love it.

Working at Star Labs, we jump between distros regularly, so I don’t stick with one. I probably use Ubuntu, Manjaro, and Fedora the most.”

What is your absolute favorite aspect about being part of the Linux and open source community? Is there any particular area that you think the Linux community is lacking in and can improve upon?

“I love the way the community approaches new things and this is mainly because there isn’t a ‘correct’ approach or objective. Even if a strange idea is put to the community, such as having two docks, the community will respond with multiple ways of doing it (and probably a way of having three docks).”

What is one FOSS project that you would like to bring to the attention of the community?

“I’ve followed eXtern OS for a few years now. It’s both intriguing and beautiful. As far as I know, nothing like this has ever become mainstream, so it would be very interesting if it did.”

Do you think that the Linux ecosystem is too fragmented? Is fragmentation a good or bad thing in your view?

“I wouldn’t say it’s too fragmented, but it definitely is fragmented. On the whole, I think it’s good–it would be very boring if the world looked like the inside of a Starbucks with Apple logos glowing at you from every direction.

The fragmentation gives us a lot of choice, and for once, I don’t think it’s bad–maybe there is a missed opportunity. Imagine putting the developers from the best Linux distros in a room and combining them into one. It would be amazing, but it would also null-and-void the reason they exist.”

What do you think the future of Linux holds?

“I think that the main thing that will come in the future is more awareness and popularity. Strangely, some of this is due to Microsoft, but there’s definitely been an increase of interest in alternatives to Windows and macOS.”

What do you think it will take for the Linux desktop to compete for a greater share of the desktop market space with the proprietary alternatives?

“Maybe I’m biased, but I think it’s more companies offering it pre-installed. It almost circles back to the first question, as many people haven’t heard of Linux. If it was available in stores, alongside Windows and macOS, it would level the playing field from the start.”

Can you tell us a little bit about the history of Star Labs Systems, how it was conceived, how it grew, and where you think it is positioned today?

“It was conceived in a pub by three people who wanted laptops for themselves that worked well with Linux. It really just grew by word of mouth, as we didn’t even run any marketing until about three months ago.

We had a brilliant comment on Facebook the other day, which is possibly the best way you could describe Star Labs today–‘This is what happens when nerds create a computer manufacturer. A bunch of pop culture references and a solid looking machine spec-wise for a decent price’.”

What is your favorite part about working for a company like Star Labs?

“Essentially, we get to play with toys! Granted, there is a lot of work involved, but when you break it down, all we do is continually test and develop in the endless pursuit of the perfect laptop.”

Recently, you’ve announced partnerships with some popular distributions that have never been offered as a pre-installed option on hardware before. What factors come into play when you’re deciding on which distributions you would like to offer pre-installed? Is there any work that goes into tweaking the hardware or software for each particular distribution that you support?

“It’s a combination of requests, what the distribution is built for, and there has to be an OEM installer.

Take Arch as an example: we’ve had a lot of requests to offer it pre-installed, but on some level, pre-installing it defeats the point of Arch. Pre-installing Manjaro, on the other hand, which focuses on making things easier and more automated, aligns with what we offer.

One of the main reasons of Star Labs is that the laptops work well with any Linux distribution, so nothing needs to be changed for any distribution to work well. Some of the pre-installed images do contain a few tweaks here and there.”

What are some unique aspects of Star Labs’ computers that you think set them apart from not only other Linux-focused manufacturers, but the greater hardware manufacturing world in general?

“The main thing we are known for is that our laptops are built specifically for Linux, so they offer the unrivaled level of compatibility and firmware updates via the LVFS. Aside from that, they offer a lot of small things that tailor the experience to the Linux user. I don’t know many people who use Windows that would ever need to temporarily disable their trackpad, but for a developer (who lives in the terminal), switching off the trackpad for 10 minutes with the press of a button is incredibly useful. There’s a host of features like this that really do make the laptops enjoyable to use.”

Is there anything exciting in the works regarding the future of Star Labs Systems, without giving too much away?

“I honestly find all of it exciting! The blue braided cables and blue protective sleeves were the highlights of the new laptops for me. As for things still to come, we’ve got Coreboot coming soon and the 15″ Star LapTop Pro is coming back later this year.”

Do you have any major personal goals that you would love to achieve in the near future?

“I’d like to teach the dog to speak in full sentences.”

I just want to wholeheartedly say thank you to Sean for taking time out of his extremely busy schedule to prepare an interview with Linux++. It is really incredible to see the growth of Star Labs Systems and their approach to Linux-first hardware. I expect to see a lot more news surrounding the company in the coming years and wish them nothing but the utmost success in their future endeavors!

If you would like to keep up with the latest news from Star Labs Systems you can find them on Twitter, Facebook, Instagram, or their official website.

Exploring the Linux Ecosystem

This week, we’re going to take a look at one of the most important aspects of computing–storage and, in particular, file systems. With all the buzz around Btrfs becoming the default file system in the Red Hat-sponsored Fedora, I thought it might be a good time to explore the different kinds of file systems one can utilize with Linux, their benefits and limitations, and where you might normally see them. I hope you enjoy!

The Many Faces of the Linux File System

Though there is a vast array of file systems available to use with the Linux kernel today, only a few see heavy usage from the Linux community. We’ll take a look at the most popular file systems in use today as well as an up-and-coming file system that I’ve had my eye on for quite a while!

Extended File System, version 4 (ext4)

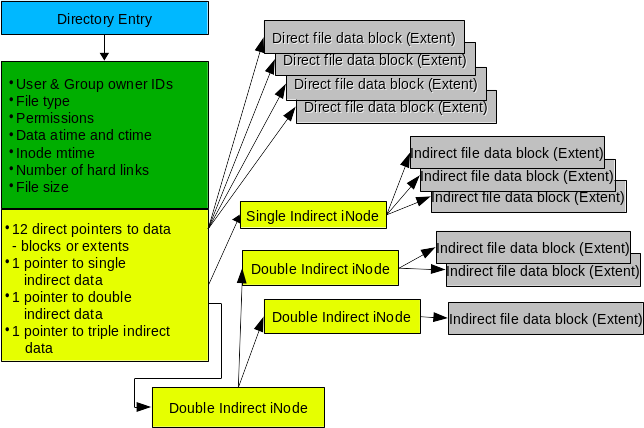

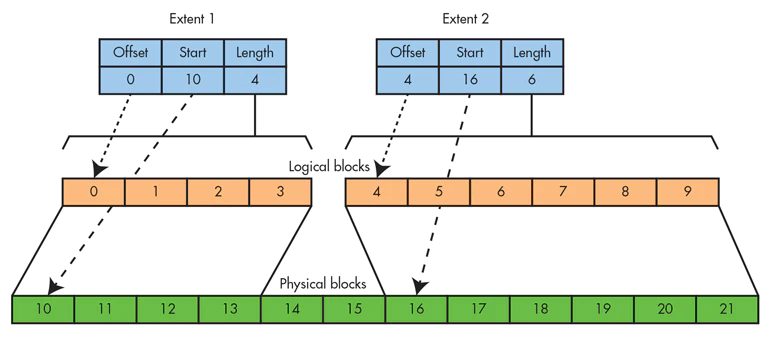

The ext4 file system is without a doubt the most popular choice for file storage in the Linux (especially, desktop) world. ext4 is the latest generation of the Extended File System family, with both ext2 and ext3 being used heavily prior (and even still today in some instances). Developed by long-time Linux kernel developer, Theodore Ts’o, ext4 was introduced as stable in October 2008, using speedy linked lists and hashed B-Trees as the base data structure.

ext4 is what is known as a journaling file system, which means that it uses a “journal” (usually a circular log) to record the intentions of changes that are not yet committed to the file system. After a crash or unexpected shutdown, the file system can replay the journal to return to a consistent state instead of being left with half-applied changes.
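To make the journaling idea concrete, here is a minimal sketch in Python. It is a toy model and not ext4's actual on-disk format: the class `ToyJournaledStore` and its methods are hypothetical names invented for illustration. Changes are first recorded in a journal, then marked committed, and only committed entries are replayed after a simulated crash.

```python
# Toy write-ahead journal: illustrates the idea only, not ext4's real format.
# All names here (ToyJournaledStore, begin, commit, recover) are hypothetical.

class ToyJournaledStore:
    def __init__(self):
        self.disk = {}      # the committed file system state
        self.journal = []   # log of intended changes

    def begin(self, changes):
        """Record the intended changes in the journal before touching disk."""
        entry = {"changes": dict(changes), "committed": False}
        self.journal.append(entry)
        return entry

    def commit(self, entry):
        """Mark a journal entry as complete, so it is safe to replay."""
        entry["committed"] = True

    def recover(self):
        """After a crash: replay committed entries, discard incomplete ones."""
        for entry in self.journal:
            if entry["committed"]:
                self.disk.update(entry["changes"])
        self.journal = []


store = ToyJournaledStore()

first = store.begin({"/notes.txt": "hello"})
store.commit(first)

# Simulated crash: this change was journaled but never committed.
store.begin({"/notes.txt": "half-written", "/tmp.txt": "junk"})

store.recover()
print(store.disk)  # {'/notes.txt': 'hello'}
```

The half-written change never reaches the "disk", which is the consistency guarantee a journal is meant to provide.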

If you have installed a Linux distribution with the default settings in the installer, chances are extremely high that you’ve been using ext4 all along without even knowing it. This is because ext4 is the default file system for the vast majority of Linux distributions on the planet. Though even Ts’o himself has said that ext4 isn’t the future of file systems–those rights are reserved for the likes of Btrfs or ZFS–what functionalities ext4 lacks it more than makes up for in pure speed and reliability.

In the beginning, the early versions of Linux used a modified version of the MINIX 1 file system. However, the MINIX file system ran into a bunch of limitations and many of the early kernel developers had laid out plans to replace it very early on. The first attempt, by Rémy Card, was the original Extended File System (ext). ext became the very first file system to utilize the Virtual File System (VFS) layer in the Linux kernel–which is an extremely important aspect of the kernel that allows so many different file systems to work with it.

However, the way that ext was built gave rise to a whole bunch of other issues compared to the MINIX file system. Though ext fixed the limitations of the MINIX file system, it contained other problems like the lack of support for separate timestamps for file access, inode modification, and data modification.

In order to fix the problems with ext, Card began working on ext2, which drew a lot of inspiration from the Berkeley Fast File System (also known as the Unix File System or UFS), which was utilized in many different Unix and BSD operating systems (and still is today in the case of BSD). With these new improvements, ext2 quickly became a household name for early Linux adopters.

As the years rolled on, an extraordinary amount of work was put into the next iterations of the Extended File System family, ext3 and ext4. However, even with newer versions of the file system family, all three are still used in different situations today, though ext4 is recommended for most use cases. Today, ext4 is still the most popular Linux file system, even above more advanced kinds, like Btrfs or ZFS. I think it is pretty safe to say that this file system will be around for a very long time!

X File System (XFS)

Initially developed by yesteryear’s tech megalith, Silicon Graphics Inc. (SGI), XFS was originally conceived for SGI’s own commercial UNIX variant, IRIX, in 1993. The main goal? High performance, high reliability, and better parallel performance. As Linux began to gain enterprise clout throughout the 1990s, it was clear that SGI should make their file system available to the up-and-coming UNIX competitor. In 2001, XFS was ported to the Linux kernel by a team led by Steve Lord and saw its first inclusion in a Linux distribution that same year. In mid-2002, Gentoo became the first distribution to allow XFS as the default file system through an option flag in the install process.

Today, XFS can be utilized in any flavor of Linux due to its integration in the mainline Linux kernel and remains one of the most actively developed file systems in the kernel. Like ext4, XFS is a journaling file system with a focus on speed due to parallel input/output operations–a feature based on allocation groups within its architecture. XFS also employs metadata journaling as well as write barriers in order to ensure the consistency of data held in the B+ Trees that make up its main indexing structure.

XFS has a large amount of developer support behind it and is utilized heavily in many areas of enterprise. Though it lacks the functionality of file systems like Btrfs and ZFS, the roadmap for XFS contains a lot of very exciting new features including snapshots, Copy-on-Write (CoW) data, data deduplication, reflink copies, online data and metadata scrubbing, accurate reporting of data loss and bad disk sectors, and significantly improved tooling for reconstruction of damaged or corrupted file systems.

This work is hard because it requires changing the on-disk format of XFS, which is tricky to do while keeping backwards compatibility. It will definitely be interesting to see how the XFS developers begin implementing these advanced features and whether they will be able to keep up the speed and reliability that XFS has long been known for.

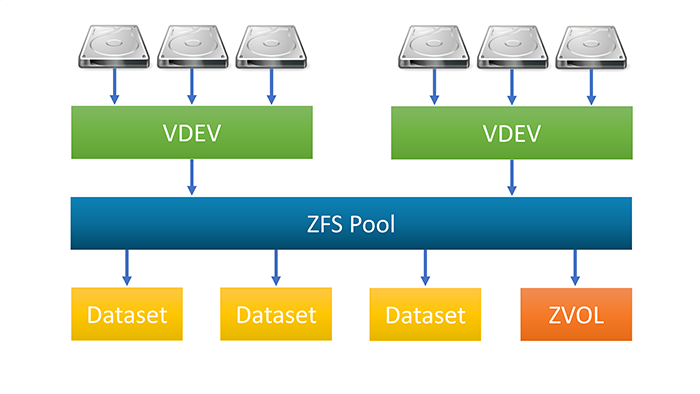

Z File System (ZFS)

Originally created by Sun Microsystems in 2006 via a team led by Jeff Bonwick, ZFS is considered by many to have been the first of the “next generation” file systems, including advanced features like full file system snapshots, per-block checksumming, and self-healing properties. Originally coined as the “Zettabyte File System”, that name has been dropped in favor of the simpler “Z File System” moniker. Though ZFS was created as part of Sun Microsystems’ proprietary UNIX offering, Solaris, it would eventually make its way into the open-source world via the decision by Sun to open-source most of the Solaris codebase, including ZFS, through the OpenSolaris project.
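The per-block checksumming and self-healing mentioned above can be illustrated with a small sketch. This is a toy model under stated assumptions, not ZFS code: the `ToyMirror` class is hypothetical, it keeps exactly two copies of each block, and it uses SHA-256 from Python's standard library purely for convenience. On every read, the stored checksum is used to find a good copy, and any bad copy is rewritten from it.

```python
import hashlib


def checksum(data: bytes) -> str:
    """Checksum of one block (SHA-256 chosen only for this sketch)."""
    return hashlib.sha256(data).hexdigest()


class ToyMirror:
    """Hypothetical two-way mirror with checksums kept apart from the data."""

    def __init__(self):
        self.copies = [{}, {}]   # two independent copies of every block
        self.checksums = {}      # block id -> expected checksum

    def write(self, block_id, data: bytes):
        for copy in self.copies:
            copy[block_id] = data
        self.checksums[block_id] = checksum(data)

    def read(self, block_id) -> bytes:
        expected = self.checksums[block_id]
        good = None
        for copy in self.copies:
            if checksum(copy[block_id]) == expected:
                good = copy[block_id]
                break
        if good is None:
            raise IOError(f"block {block_id}: every copy failed its checksum")
        # Self-heal: overwrite any copy that does not match the good data.
        for copy in self.copies:
            if copy[block_id] != good:
                copy[block_id] = good
        return good


mirror = ToyMirror()
mirror.write(0, b"important data")

# Simulate silent corruption ("bit rot") on the first copy.
mirror.copies[0][0] = b"imp0rtant data"

print(mirror.read(0))       # b'important data'
print(mirror.copies[0][0])  # b'important data' (repaired on read)
```

Because the checksum is stored separately from the block it describes, a corrupted copy cannot vouch for itself, which is what lets the read path tell the good copy from the bad one.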

Unfortunately, with the acquisition of Sun by Oracle in 2010, OpenSolaris was closed off, and ZFS development went closed source along with it. Fortunately, engineers who had worked on Solaris and OpenSolaris kept the open-source code alive with initiatives like OpenIndiana and the illumos project. In order to coordinate and improve upon the last available open-source ZFS code, an initiative known as OpenZFS was started, and it grew into a major force in bringing ZFS to operating systems beyond OpenIndiana. A strong push by the OpenZFS developers to bring ZFS to the Linux kernel birthed the now-popular ZFS on Linux (ZoL) codebase.

Now, unlike the other file systems on this list, ZoL is actually not provided as part of the mainline Linux kernel. This is due to the CDDL license that Sun Microsystems used to open-source Solaris and its unfortunate incompatibility with the Linux kernel’s GPLv2 license. Considering that Oracle, a long-time enemy of the open-source movement that has been known to litigate, holds the rights to the proprietary ZFS file system, Linus himself has stated that ZFS will never be integrated into the mainline kernel barring written consent from Oracle’s CEO.

Even so, the OpenZFS project maintains the ZoL project, which allows Linux users who want to leverage ZFS to do so by incorporating it into the kernel via a DKMS (Dynamic Kernel Module Support) module. Though this isn’t recommended for new Linux users, there are many threads on the topic just in case you’d like to see what ZFS has to offer on your Linux distribution.

More recently, ZoL support became an experimental feature integrated into the installer of Canonical’s Ubuntu, the most popular and widely used desktop Linux system in the world, with the 19.10 “Eoan Ermine” release. Though many questions have been raised about the true legality of this inclusion, Canonical has publicly stated that they have had some of the top experts in software law review the possible case for utilizing ZoL and have said that they cannot see any way that it could be used against them by Oracle or anyone else for that matter.

The main advantages of ZFS include pooled storage for integrated volume management, copy-on-write data and metadata, snapshots, data integrity verification, self-healing properties, RAID-Z configurations, internal compression, end-to-end checksumming, a massive maximum file size (16 Exabytes) and total storage size (256 Quadrillion Zettabytes), as well as no limit to the number of datasets or files available among other advanced features.
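Those size limits are easier to picture with a little arithmetic. The sketch below assumes the figures correspond to 2^64 bytes (maximum file size) and 2^128 bytes (maximum pool size) measured with binary prefixes, and that “quadrillion” is read in the binary sense of 2^50. These are assumptions about how the numbers are usually derived, not something stated above.

```python
# Binary-prefix units, in bytes.
EIB = 2**60   # one exbibyte
ZIB = 2**70   # one zebibyte

# Assumed limits: 64-bit file offsets and 128-bit pool addressing.
max_file_bytes = 2**64
max_pool_bytes = 2**128

# 2**64 / 2**60 = 2**4
print(max_file_bytes // EIB)        # 16

# 2**128 / 2**70 = 2**58 = 256 * 2**50
pool_in_zib = max_pool_bytes // ZIB
print(pool_in_zib == 256 * 2**50)   # True
print(pool_in_zib)                  # 288230376151711744
```

In other words, the “256 quadrillion” figure only works out with 2^50 as the quadrillion. Counted in decimal, the same limit is roughly 288 quadrillion zebibytes.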

ZFS is a massive codebase with an incredible history of robustness and innovation behind it. Unfortunately, the licensing issues surrounding it will hold it back from mainline adoption, but it is still at the heart of many enterprise-based storage solutions today. In fact, the most popular Network Attached Storage (NAS) operating system in the world, FreeNAS (now TrueNAS CORE), utilizes OpenZFS with a stripped down version of FreeBSD.

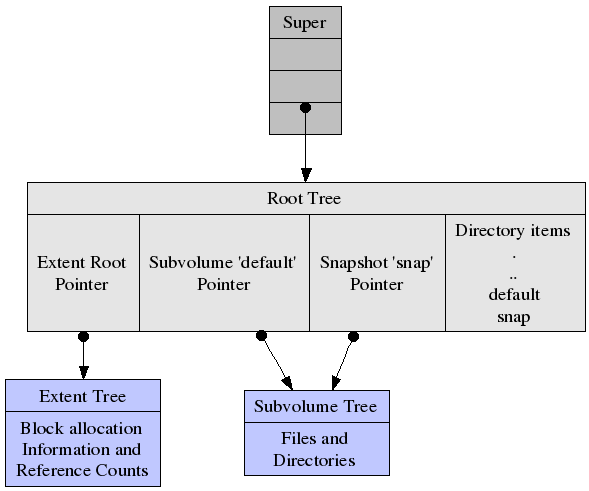

B-Tree File System (Btrfs)

A proposal presented by Ohad Rodeh at The Advanced Computing Systems Association (USENIX) in 2007 brought the CoW (Copy-on-Write) B+ Tree data structure to public attention. Though the B-tree data structure dates back to the early 1970s and has gained considerable use in computer science since, the CoW aspects of this novel implementation sparked the idea of a new file system built around it in the mind of SUSE employee, Chris Mason, who had been working on their ReiserFS project for some time.

Mason left SUSE for Oracle to begin building Btrfs (often pronounced “Butter FS” or “Better FS”) as an open-source, next generation file system that could rival the popular and extremely advanced ZFS. The goal of Btrfs was stated by Mason in the early days of development:

“[The goal was] to let [Linux] scale for the storage that will be available. Scaling is not just about addressing the storage, but also means being able to administer and manage it with a clean interface that lets people see what’s being used and makes it more reliable.”

Therefore, Btrfs has constantly attempted to achieve “feature parity” with its main competitor, ZFS. Btrfs includes many of the favorable features found in ZFS, with a few of its own, including being an extent-based file system, dynamic inode allocation, writeable snapshots, subvolume integration, checksumming on data and metadata, internal compression, integrated multiple device support, incremental backup, self-healing properties, out-of-band deduplication, swapfile support, SSD (flash storage) awareness, and offline file system checks among other advanced features.
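The link between Copy-on-Write trees and cheap snapshots is easier to see in code. The sketch below is not Btrfs's implementation: it swaps the B+ Tree for a plain binary search tree to stay short, and the names `Node`, `insert`, and `lookup` are hypothetical. What it does show is the core CoW idea: an update copies only the nodes on the path to the change, and a snapshot is nothing more than a saved root pointer.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Node:
    """Immutable tree node; frozen so existing nodes are never modified."""
    key: str
    value: str
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def insert(root: Optional[Node], key: str, value: str) -> Node:
    """Return a new root; only nodes on the path to `key` are copied."""
    if root is None:
        return Node(key, value)
    if key < root.key:
        return Node(root.key, root.value, insert(root.left, key, value), root.right)
    if key > root.key:
        return Node(root.key, root.value, root.left, insert(root.right, key, value))
    return Node(key, value, root.left, root.right)


def lookup(root: Optional[Node], key: str) -> Optional[str]:
    while root is not None:
        if key == root.key:
            return root.value
        root = root.left if key < root.key else root.right
    return None


live = None
for name, data in [("m", "v1"), ("c", "v1"), ("t", "v1")]:
    live = insert(live, name, data)

snapshot = live                  # taking a snapshot just keeps the old root
live = insert(live, "c", "v2")   # the write copies the path from root to "c"

print(lookup(snapshot, "c"))         # v1
print(lookup(live, "c"))             # v2
print(live.right is snapshot.right)  # True: the untouched subtree is shared
```

Since unchanged subtrees are shared between the snapshot and the live tree, a snapshot costs almost nothing until the two versions start to diverge.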

It took two years for Btrfs to be accepted into the mainline Linux kernel after finalizing its on-disk format. In its earliest years, Btrfs was plagued by bugs and was not considered a reliable file system to use. However, many major open-source companies saw the potential of having a next generation file system that could match ZFS and Btrfs really started gaining momentum from the likes of SUSE, Oracle, Red Hat, and, more recently, Facebook. Due to this enterprise backing, Btrfs began to develop extremely quickly and looked to soon become the default file system for Linux.

However, as Btrfs grew, more and more problems were found in its underlying architecture, and just as it was supposed to be ready for prime time in around 2015, it was clear that much more work was needed before it could be claimed reliable. The Linux community became split on Btrfs, with Red Hat deciding to remove it from their flagship Red Hat Enterprise Linux 8 release. On the other hand, SUSE adopted Btrfs as its default file system in their flagship offering, SUSE Linux Enterprise Server, as well as the community-run open-source implementation, openSUSE.

Today, there is no doubt that Btrfs has advanced incredibly since that time of uncertainty. SUSE has been working on the project as well as using it as the default file system option for going on five years. Facebook, a company that ingests a massive amount of data each and every day, has chosen Btrfs as their storage file system. And, more recently, the Fedora community has shown its support for Btrfs by voting to make it the default file system in Fedora 33 in place of ext4.

I think it is safe to say that Btrfs will grow in adoption more and more over the years as the major Linux companies begin throwing their weight behind it (as with many other components like systemd or Wayland). With the addition of Btrfs as Fedora’s default file system, we may even see it creep in as the default in RHEL 9, when that is launched. It is definitely an exciting time for the Btrfs team and I know I am looking forward to seeing the potential and possibilities that it will provide as intensive development continues.

Block Cache File System (bcachefs)

In 2015, Linux kernel developer, Kent Overstreet, announced a project that he had been working on for some time–a new filesystem named Bcachefs. Built upon a cache structure in the Linux kernel’s block layer, bcache, the goal of the project is to provide a file system that includes many of the advanced features of next generation file systems, like Btrfs and ZFS, while keeping competitive performance of more stripped down file systems, like ext4 and XFS.

Though the project is still young (it only has 5 years of development behind it as opposed to 14 and 13 years for ZFS and Btrfs, respectively), Bcachefs has shown some extremely promising results early on. At the moment, Bcachefs is not part of the mainline Linux kernel, but according to Overstreet, it is very close to hitting that goal. Recent testing by community members like Michael Larabel (Phoronix) has shown that Bcachefs performs very promisingly when compared to the other popular Linux file systems, though not all features have been implemented in their entirety. The advanced features that will make their way into Bcachefs include:

- Copy-on-Write (CoW)

- Full data and metadata checksumming

- Multiple devices, including replication and other types of RAID

- Caching

- Compression

- Encryption

- Snapshots

- Scalability

- Erasure Coding and much more

It is safe to say that Bcachefs is an extremely intriguing up-and-comer with a solid foundation and extremely well thought-out design that boasts one of the cleaner codebases (though it is still young ;)) out of all the file systems. Bcachefs is currently being developed primarily by Overstreet, who left his career at Google to work on the file system full-time, with the help of a few developers as well as a small, but dedicated group of testers. Because Overstreet is working on Bcachefs full-time, the project is funded by his Patreon page that can be found here.

Personally, I can’t wait for Bcachefs to be merged into the mainline kernel to make it easier to test and utilize. I hope to see this advanced file system make a name for itself in the coming years and become a major competitor to other next generation file systems like Btrfs and ZFS.

Of course, there are many other file systems supported by the Linux kernel, including JFS, ReiserFS, 9p, AFS, BFS, FUSE, RamFS, F2FS, and even Microsoft’s exFAT and NTFS file systems. However, the ones covered above are the most commonly seen in the Linux world.
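If you are curious which file systems your own running kernel currently has registered, one place to look is `/proc/filesystems`. The sketch below parses that file's simple format (an optional `nodev` flag, then the file system name). The function name `parse_proc_filesystems` and the sample text are made up for illustration, and the file only lists file systems that are built in or whose modules are currently loaded.

```python
from pathlib import Path


def parse_proc_filesystems(text: str) -> dict:
    """Map each file system name to True if it needs a block device."""
    result = {}
    for line in text.splitlines():
        fields = line.split()
        if not fields:
            continue
        name = fields[-1]
        # Lines flagged "nodev" are virtual file systems (no block device).
        result[name] = fields[0] != "nodev"
    return result


# Hypothetical sample in the same format as /proc/filesystems.
sample = "nodev\tsysfs\nnodev\ttmpfs\n\text4\n\txfs\n\tbtrfs\n"
supported = parse_proc_filesystems(sample)
print(sorted(name for name, needs_dev in supported.items() if needs_dev))
# ['btrfs', 'ext4', 'xfs']

# On a Linux machine, the same function works on the real file.
proc = Path("/proc/filesystems")
if proc.exists():
    print(sorted(parse_proc_filesystems(proc.read_text())))
```

A file system missing from the real output is not necessarily unsupported. Its kernel module may simply not be loaded yet.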

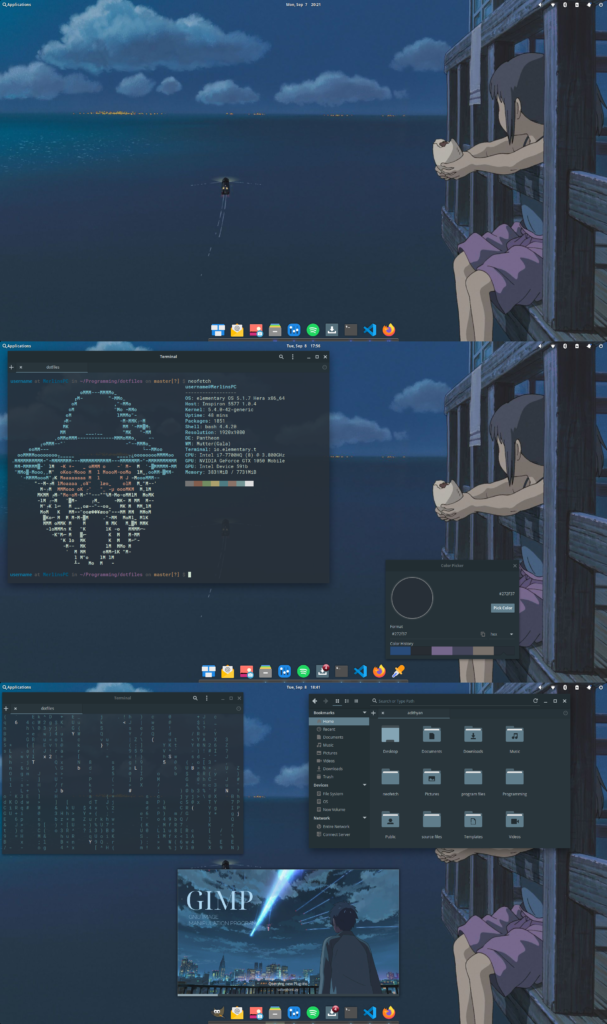

Linux Desktop Setup of the Week

This week’s selection was presented by u/Frillon_Freud in the post titled [Pantheon] Elementary OS. First Rice. Here is the screenshot that they posted:

And here are the system details:

- OS: elementary OS 5.1.7

- WM: Mutter (Gala)

- Shell: bash 4.4.20

- Terminal: io.elementary.t

- GTK Theme: Adapta-Bluegrey-Nokoto-Eta

- Icons: ePapirus

- Neofetch Art: Custom ASCII Art

Thanks, u/Frillon_Freud, for an awesome, unique, and well-themed Pantheon desktop!

If you would like to browse, discover, and comment on some interesting, unique, and just plain awesome Linux desktop customization, check out r/unixporn on Reddit!

See You Next Week!

I hope you enjoyed reading about the goings-on of the Linux community this week. Feel free to start up a lengthy discussion, give me some feedback on what you like about Linux++ and what doesn’t work so well, or just say hello in the comments below.

In addition, you can follow the Linux++ account on Twitter at @linux_plus_plus, join us on Telegram here, or send email to linuxplusplus@protonmail.com if you have any news or feedback that you would like to share with me.

Thanks so much for reading, have a wonderful week, and long live GNU/Linux!
